😎 First time meeting the studying Jingjing Alpaca?


[DEEPNOID One-Point Lesson]_3_Classification 1. ResNet/DenseNet

Jingjing Alpaca · 2022. 1. 25. 11:36 · Written 220125

<This post was written while studying with the DEEPNOID One-Point Lesson as a reference>

Artificial Intelligence | Deepnoid


www.deepnoid.com

1. ResNet

: Paper: "Deep Residual Learning for Image Recognition"

: Very deep networks using residual connections (up to 152 layers)

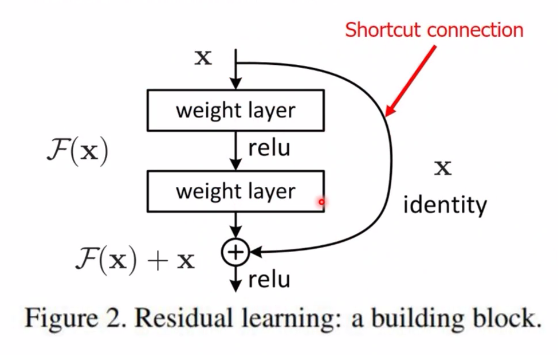

: Shortcut connection

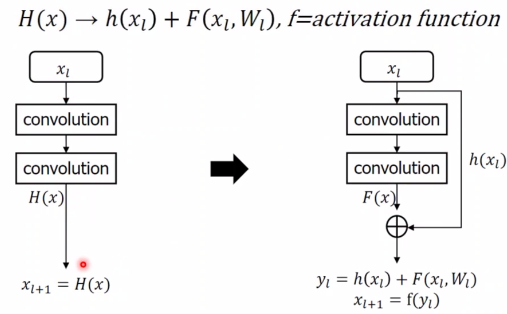

: Residual Learning

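: A shortcut lets the block's input x be added directly to its output

: The block outputs H(x) = F(x) + x, so the stacked layers learn F(x) instead of the full mapping H(x)

: x is the block's input, which the block itself cannot change

: Since F(x) = H(x) - x, learning F(x) means learning the difference H(x) - x; when the desired mapping is close to the identity, the layers only need to push F(x) toward 0

=> H(x) - x is called the residual

=> Because the network learns this residual, it is called a Residual Network (ResNet)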

=> Skip connections mitigate the vanishing-gradient problem, so ResNet can train very deep networks (152 layers) and achieve better performance
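One differentiation step shows why the shortcut helps the gradient. This is a simplified view: it assumes the block output is exactly H(x) = F(x) + x, ignoring the ReLU applied after the addition. Here 𝓛 is the training loss and I is the identity matrix; both symbols are introduced for this sketch.

$$
\frac{\partial \mathcal{L}}{\partial x}
= \frac{\partial \mathcal{L}}{\partial H}\,\frac{\partial H}{\partial x}
= \frac{\partial \mathcal{L}}{\partial H}\left(\frac{\partial F}{\partial x} + I\right)
= \frac{\partial \mathcal{L}}{\partial H}\,\frac{\partial F}{\partial x} + \frac{\partial \mathcal{L}}{\partial H}
$$

The last term passes the upstream gradient to x unchanged. Even when ∂F/∂x is small, the gradient therefore does not shrink toward zero. In a plain deep network, by contrast, it is a product of many per-layer factors and can shrink.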

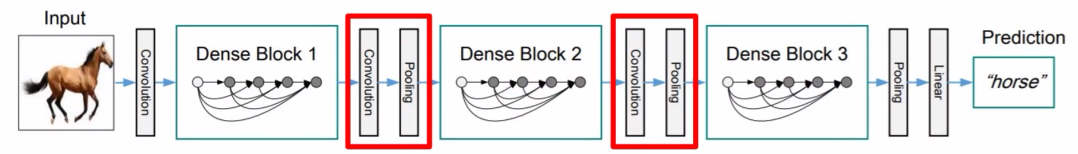
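The residual block described above can be sketched in plain Python on small vectors. This is a minimal illustration, not the paper's implementation. It assumes a basic block of two weight layers, F(x) = W2·ReLU(W1·x), with biases omitted and a ReLU after the addition. The weight matrices in the example are arbitrary placeholders.

```python
# Minimal residual block on plain Python lists (no deep-learning library).
# Weights are placeholder matrices (lists of rows); biases are omitted.

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]

def matvec(w, v):
    """Multiply matrix w (list of rows) by vector v."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]

def residual_branch(x, w1, w2):
    """F(x): two weight layers with a ReLU in between."""
    return matvec(w2, relu(matvec(w1, x)))

def residual_block(x, w1, w2):
    """H(x) = ReLU(F(x) + x): the shortcut adds the input back unchanged."""
    f = residual_branch(x, w1, w2)
    return relu([fi + xi for fi, xi in zip(f, x)])

if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    zeros = [[0.0] * 3 for _ in range(3)]
    # With all-zero branch weights, F(x) = 0 and the block passes a
    # non-negative x through unchanged: the identity comes "for free".
    print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 3.0]
```

The zero-weight case illustrates the point made above. If the best mapping is close to the identity, the layers only have to drive F(x) toward 0 rather than reproduce x themselves.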

2. DenseNet

: To further improve model compactness

: Dense Connectivity

: Composite Function (BN-ReLU-Conv)

: Pooling Layers

: Growth Rate

: Bottleneck Layers

: Compression
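Dense connectivity and the growth rate can be written compactly in the notation of the DenseNet paper. There, x_ℓ is the output of layer ℓ, H_ℓ is the composite function (BN-ReLU-Conv), and [·] denotes channel-wise concatenation. The two connection patterns compare as follows:

$$
\text{ResNet: } x_\ell = H_\ell(x_{\ell-1}) + x_{\ell-1}
\qquad
\text{DenseNet: } x_\ell = H_\ell\big([x_0, x_1, \ldots, x_{\ell-1}]\big)
$$

Suppose each H_ℓ produces k feature maps (the growth rate) and the block input has k_0 channels. Then layer ℓ receives k_0 + k(ℓ − 1) input feature maps. This is why k is kept small; for example, the paper uses k = 12 and k = 32.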

- Advantages

: Alleviates the vanishing-gradient problem

: Easier feature propagation and feature reuse

: Regularization effect

: High parameter efficiency

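=> By combining an evolved form of skip connection with bottleneck layers, DenseNet trains very deep networks in which each layer adds only a few new feature maps, which improves performance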

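1) Dense connection, evolved from ResNet's skip connection (feature maps are concatenated rather than added)

2) Bottleneck layers applied to DenseNet

: Every layer keeps concatenating its new feature maps onto all of the previous feature maps, so the number of input channels keeps growing; a 1×1 convolution (bottleneck) before each 3×3 convolution keeps this input manageable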
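The channel bookkeeping inside one dense block with bottleneck layers (DenseNet-B) can be traced with a short script. It is a sketch that tracks channel counts only, not actual convolutions. It assumes the paper's choice that each 1×1 bottleneck outputs 4k channels. The values k0 = 64, k = 32 and 6 layers are illustrative, chosen to resemble the first block of DenseNet-121.

```python
# Sketch: channel counts inside one DenseNet-B dense block.
# Feature maps are represented only by their number of channels.

def dense_block_channels(k0, growth_rate, num_layers, bottleneck_factor=4):
    """Return ([(in, bottleneck, out) per layer], block_output_channels).

    Each layer sees the concatenation of the block input and all earlier
    layers' outputs, squeezes it with a 1x1 conv to bottleneck_factor * k
    channels, then a 3x3 conv produces k new channels.
    """
    channels = k0
    per_layer = []
    for _ in range(num_layers):
        in_ch = channels                          # all previous maps, concatenated
        mid_ch = bottleneck_factor * growth_rate  # 1x1 bottleneck conv output
        out_ch = growth_rate                      # 3x3 conv output (new maps)
        per_layer.append((in_ch, mid_ch, out_ch))
        channels += out_ch                        # concatenation, not summation
    return per_layer, channels

if __name__ == "__main__":
    layers, total = dense_block_channels(k0=64, growth_rate=32, num_layers=6)
    for i, (cin, cmid, cout) in enumerate(layers, start=1):
        print(f"layer {i}: in={cin}, 1x1 -> {cmid}, 3x3 -> {cout}")
    print("block output channels:", total)  # 64 + 6 * 32 = 256
```

The input width grows by k per layer, as k0 + k(ℓ − 1) predicts. The bottleneck caps what the 3×3 convolution sees at 4k channels, however many maps have been concatenated.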

3) Transition Layer

Downsampling

: Reduces the width and height of the feature maps, and (with compression) reduces the number of feature maps
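A transition layer's effect on shape can be sketched as follows. This assumes the DenseNet-BC design: a 1×1 convolution compresses m channels to ⌊θ·m⌋ with 0 < θ ≤ 1, and 2×2 average pooling with stride 2 halves the spatial size. The helper names and the example sizes are hypothetical.

```python
import math

def transition_layer_shape(channels, height, width, theta=0.5):
    """Output shape (C, H, W) of a DenseNet transition layer (sketch)."""
    if not 0 < theta <= 1:
        raise ValueError("theta must be in (0, 1]")
    out_channels = math.floor(theta * channels)  # 1x1 conv with compression
    return out_channels, height // 2, width // 2  # 2x2 avg pool, stride 2

def avg_pool_2x2(fmap):
    """2x2 average pooling with stride 2 on one 2-D feature map."""
    h, w = len(fmap), len(fmap[0])
    return [
        [(fmap[i][j] + fmap[i][j + 1] + fmap[i + 1][j] + fmap[i + 1][j + 1]) / 4
         for j in range(0, w - 1, 2)]
        for i in range(0, h - 1, 2)
    ]

if __name__ == "__main__":
    print(transition_layer_shape(256, 56, 56))  # (128, 28, 28)
    fmap = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    print(avg_pool_2x2(fmap))  # [[3.5, 5.5], [11.5, 13.5]]
```

With θ = 1 the channel count is left unchanged and only the spatial size shrinks. θ < 1 is what the paper calls compression.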

4) Classification Layer

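Uses global average pooling followed by a single softmax classifier instead of large fully connected layers, which reduces the number of parameters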
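The parameter savings can be estimated with simple arithmetic. The comparison is between flattening the final feature maps into a fully connected layer and averaging each map to one value first. The shape used here (1024 channels of 7×7, 1000 classes) is a hypothetical example, and the helpers are illustrative.

```python
def global_average_pool(feature_maps):
    """Average each 2-D feature map to a single number (one per channel)."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]

def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features

if __name__ == "__main__":
    c, h, w, classes = 1024, 7, 7, 1000      # hypothetical final shape
    flatten_fc = linear_params(c * h * w, classes)  # flatten -> FC
    gap_fc = linear_params(c, classes)              # GAP -> FC
    print(flatten_fc, gap_fc)  # 50177000 1025000
    print(global_average_pool([[[1, 2], [3, 4]]]))  # [2.5]
```

Averaging away the 7×7 spatial grid divides the classifier's weight count by roughly 49. This is where most of the savings come from.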

I studied a bit more with the help of this post, too!

https://warm-uk.tistory.com/46

[CNN Concepts] The Evolution of CNNs, Model Summary 2 (ResNet, DenseNet)

