[DEEPNOID One Point Lesson]_4_Classification 2. MobileNet & EfficientNet

징징알파카 2022. 1. 26. 10:41 (written 220125)

<This post was written while studying from the DEEPNOID One Point Lesson>

www.deepnoid.com

1. MobileNet

: The key is making the model lightweight enough to run on mobile devices!

: Reducing the amount of computation is the top priority

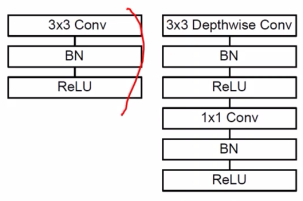

- Depthwise Separable Convolution

1) Depthwise Convolution

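: For each input feature map (channel), run a single-channel Conv, then stack the per-channel results along the channel axis (concat)

2) Separable (Pointwise) Convolution

: Combine the per-channel feature maps across channels with a 1x1 kernel Conv; each 1x1 filter merges them into one output channel

=> The separately computed feature maps are stacked into one tensor, and the 1x1 kernel Conv then only changes the number of channels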
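The two steps above can be sketched in plain Python on nested lists. This is only a minimal illustration, not the lesson's implementation: it assumes stride 1, no padding, and no bias, and the function names (`depthwise_conv`, `pointwise_conv`) and the toy input are made up for this example. The 1x1 step is the one usually called pointwise convolution.

```python
# Minimal sketch of a depthwise separable convolution on nested lists.
# Assumptions (not from the lesson): stride 1, no padding, no bias.

def conv2d_single(channel, kernel):
    """Valid 2D cross-correlation of one H x W channel with one k x k kernel."""
    k = len(kernel)
    out_h = len(channel) - k + 1
    out_w = len(channel[0]) - k + 1
    return [
        [
            sum(channel[i + di][j + dj] * kernel[di][dj]
                for di in range(k) for dj in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def depthwise_conv(x, dw_kernels):
    """x: M channels, dw_kernels: M kernels. Channel m only sees kernel m."""
    return [conv2d_single(ch, k) for ch, k in zip(x, dw_kernels)]

def pointwise_conv(x, pw_weights):
    """x: M channels, pw_weights: N rows of M weights (N filters of size 1x1)."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wt * x[m][i][j] for m, wt in enumerate(row)) for j in range(w)]
         for i in range(h)]
        for row in pw_weights
    ]

def depthwise_separable_conv(x, dw_kernels, pw_weights):
    """Depthwise step first, then the 1x1 step that mixes channels."""
    return pointwise_conv(depthwise_conv(x, dw_kernels), pw_weights)

# Hypothetical toy input: M = 2 channels of size 3 x 3
x = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[1, 0, 1], [0, 1, 0], [1, 0, 1]],
]
dw_kernels = [
    [[1, 0], [0, 1]],  # 2x2 kernel applied to channel 0 only
    [[1, 1], [1, 1]],  # 2x2 kernel applied to channel 1 only
]
pw_weights = [[1, 1], [1, -1], [0, 2]]  # N = 3 output channels

y = depthwise_separable_conv(x, dw_kernels, pw_weights)
print(len(y), len(y[0]), len(y[0][0]))  # channels, height, width
```

The depthwise step keeps the channel count at M = 2, and only the 1x1 step changes it to N = 3; the spatial size is changed only by the depthwise step.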

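2. Computation cost of a standard Conv

: (Dk^2 * M) * (N * Dg^2)

- Dk : kernel size

- Df : input size

- Dg : output size

- M : input channel

- N : output channel

: Each of the N * Dg^2 output values takes Dk^2 * M multiplications, so the spatial factor is the output size Dg^2 only (Dg = Df for stride 1 with "same" padding)

3. Computation cost of Depthwise Conv

: (Dk^2 * 1) * (M * Dg^2)

- M = 1 : each Conv runs independently on a single channel

- N = M : the Depthwise Conv output has as many channels as the input, since the per-channel results are merged with concat

4. Computation cost of Separable (Pointwise) Conv

: (1 * M) * (N * Dg^2)

- Dk = 1 : a 1x1 kernel convolution is used, so Dk^2 = 1 drops out

- Df = Dg : a 1x1 kernel convolution does not change the spatial size

5. Standard VS Depthwise Separable Conv

- Standard

= (Dk^2 * M) * (N * Dg^2)

- Depthwise Separable

= (M * Dk^2 * Dg^2) + (M * N * Dg^2)

= M * Dg^2 * (Dk^2 + N)

=> Depthwise Separable / Standard = (Dk^2 + N) / (Dk^2 * N) = 1/N + 1/Dk^2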
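To make the comparison concrete, here is a small sketch that evaluates both costs for one layer. It counts one multiplication per kernel weight per output value, so the spatial factor is Dg^2. The layer sizes (3x3 kernel, 32 input channels, 64 output channels, 56x56 output) are hypothetical values chosen for illustration, not numbers from the lesson.

```python
# Multiplication counts, counted per output position.
# dk: kernel size, m: input channels, n: output channels, dg: output size

def standard_conv_cost(dk, m, n, dg):
    return dk * dk * m * n * dg * dg

def depthwise_cost(dk, m, dg):
    # one dk x dk kernel per input channel, no mixing across channels
    return dk * dk * m * dg * dg

def pointwise_cost(m, n, dg):
    # 1x1 kernel: m multiplications per output value
    return m * n * dg * dg

def depthwise_separable_cost(dk, m, n, dg):
    return depthwise_cost(dk, m, dg) + pointwise_cost(m, n, dg)

# Hypothetical layer, chosen only for illustration
dk, m, n, dg = 3, 32, 64, 56

standard = standard_conv_cost(dk, m, n, dg)
separable = depthwise_separable_cost(dk, m, n, dg)
ratio = separable / standard

print(standard, separable, round(ratio, 4))
print(round(1 / n + 1 / dk ** 2, 4))  # same value as the ratio above
```

For a 3x3 kernel the 1/Dk^2 term dominates, so the separable version needs roughly 8 to 9 times fewer multiplications once N is reasonably large.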

6. EfficientNet

: EfficientNet Baseline (MBConv) Block + Compound Scaling

Good on both accuracy and computation cost

1) Inverted residual block (Linear Bottleneck)

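- residual block

: when the channel count is large, a 1x1 Conv first reduces the channels, a 3x3 Conv runs on the reduced channels, the channels are expanded again, and then the skip connection is added

- inverted residual block

: the reverse order: an expansion layer first increases the channels, then the Conv runs, and the result reduced back to the original channel count goes into the skip connection

- manifold

: information held in high-dimensional channels can be represented in a lower dimension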
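The difference between the two blocks is easiest to see from how the channel count changes through each one. The sketch below only tracks channel counts and does no convolution. The reduction factor 4 and expansion factor 6 are assumptions borrowed from common ResNet and MobileNetV2 settings, not values given in the lesson, and the function names are made up for this example.

```python
def residual_bottleneck_channels(c, reduction=4):
    """Wide -> narrow -> wide: the skip connection joins the two wide ends."""
    mid = c // reduction
    # input, after 1x1 reduce, after 3x3 conv, after 1x1 expand
    return [c, mid, mid, c]

def inverted_residual_channels(c, expansion=6):
    """Narrow -> wide -> narrow: the skip connection joins the two narrow ends."""
    mid = c * expansion
    # input, after 1x1 expand, after the conv, after 1x1 linear projection
    return [c, mid, mid, c]

print(residual_bottleneck_channels(256))
print(inverted_residual_channels(24))
```

In the inverted block the tensors carried by the skip connection are the narrow ones, which is part of why it is cheap in memory on mobile devices.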

7. MBConv Block - SE Block

- squeeze

: compress each feature map with GAP (global average pooling)

- excitation

: compute the importance of each channel (ReLU + sigmoid)

=> SE Block : GAP + FC + ReLU + FC + Sigmoid

=> computes an importance weight for each feature map, so more accurate information is passed on for class classification
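The GAP + FC + ReLU + FC + Sigmoid chain can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: the FC layers have no bias, the weights `w1` and `w2` are hand-picked toy values rather than trained ones, and the final step that multiplies each channel by its weight follows the usual SENet design rather than anything spelled out in the lesson.

```python
import math

def global_average_pool(x):
    """Squeeze: C feature maps of size H x W -> C channel averages."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]

def fully_connected(v, weights):
    """weights: one row of len(v) weights per output unit (no bias)."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]

def relu(v):
    return [max(0.0, a) for a in v]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-a)) for a in v]

def se_block(x, w1, w2):
    squeezed = global_average_pool(x)              # GAP
    hidden = relu(fully_connected(squeezed, w1))   # FC + ReLU
    scale = sigmoid(fully_connected(hidden, w2))   # FC + Sigmoid, one weight per channel
    # Rescale every value of channel c by that channel's importance weight
    scaled = [[[s * val for val in row] for row in ch] for ch, s in zip(x, scale)]
    return scaled, scale

# Hypothetical toy input: C = 2 channels of size 2 x 2
x = [
    [[1.0, 3.0], [5.0, 7.0]],  # channel mean 4.0
    [[2.0, 2.0], [2.0, 2.0]],  # channel mean 2.0
]
w1 = [[0.5, -0.5]]     # C = 2 -> 1 hidden unit
w2 = [[1.0], [-1.0]]   # 1 hidden unit -> C = 2

y, scale = se_block(x, w1, w2)
print([round(s, 3) for s in scale])
```

With these toy weights the first channel gets the larger importance weight, so its values are kept closer to the original while the second channel is damped.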

8. EfficientNet Baseline Block : MBConv Block

- MobileNet

: depthwise separable

: inverted residual

- SENet

: squeeze

: excitation

9. Compound Scaling

: scale Width, Depth, and Resolution together, looking for the combination that best improves model performance
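As a rough sketch of how the three factors grow together: the formulation below (depth = alpha^phi, width = beta^phi, resolution = gamma^phi, with alpha * beta^2 * gamma^2 close to 2) and the constants alpha = 1.2, beta = 1.1, gamma = 1.15 come from the EfficientNet paper, not from the lesson notes above. The function name `compound_scaling` is made up for this example.

```python
def compound_scaling(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Return (depth, width, resolution, flops) multipliers for coefficient phi."""
    depth = alpha ** phi        # more layers
    width = beta ** phi         # more channels per layer
    resolution = gamma ** phi   # larger input image
    # FLOPs grow linearly with depth and quadratically with width and resolution
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops

for phi in range(4):
    d, w, r, f = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{f:.2f}")
```

Because alpha * beta^2 * gamma^2 is close to 2, each step of phi roughly doubles the computation, which makes the cost of a scaled model easy to budget.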
