😎 First Time Meeting the Studying Whiny Alpaca?

[v0.31]Image Processing_Finding Correct Matches

Written 220117

<This post was written while studying with reference to the blog of 귀퉁이 서재>

Reference: OpenCV - 29. Finding Correct Matches (bkshin.tistory.com)

1. Finding correct matches

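match() function

: Compares every descriptor one by one to find matches

: Good matches can be picked by keeping only those whose distance falls within the lowest few percent of the range between the smallest and the largest distance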

# Finding good matches with match()
import cv2, numpy as np

img1 = cv2.imread('img/taekwonv1.jpg')
img2 = cv2.imread('img/figures.jpg')
gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# Extract keypoints and descriptors with ORB ---①
detector = cv2.ORB_create()
kp1, desc1 = detector.detectAndCompute(gray1, None)
kp2, desc2 = detector.detectAndCompute(gray2, None)

# Match with a brute-force Hamming matcher (cross-check on) ---②
matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
matches = matcher.match(desc1, desc2)

# Sort the matches by distance, ascending ---③
matches = sorted(matches, key=lambda x: x.distance)

# Get the minimum and maximum distance ---④
min_dist, max_dist = matches[0].distance, matches[-1].distance

# Set the threshold 20% of the way from the min to the max distance ---⑤
ratio = 0.2
good_thresh = (max_dist - min_dist) * ratio + min_dist

# Keep only the matches below the threshold as good matches ---⑥
good_matches = [m for m in matches if m.distance < good_thresh]
print('matches:%d/%d, min:%.2f, max:%.2f, thresh:%.2f' \
      % (len(good_matches), len(matches), min_dist, max_dist, good_thresh))

# Draw only the good matches ---⑦
res = cv2.drawMatches(img1, kp1, img2, kp2, good_matches, None, \
                      flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)

# Show the result
cv2.imshow('Good Match', res)
cv2.waitKey()
cv2.destroyAllWindows()

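knnMatch() function

: Returns, for each descriptor, its k nearest-neighbor matches ordered from closest to farthest

: If the nearest neighbor is much closer than the second-nearest one, the match is distinctive and likely correct;

if the two distances are similar, the match is ambiguous and more likely wrong

: Keeping only the matches whose nearest neighbor is clearly closer than the next one (Lowe's ratio test) finds good matches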

# Finding good matches with knnMatch()
import cv2, numpy as np

img1 = cv2.imread('img/taekwonv1.jpg')
img2 = cv2.imread('img/figures.jpg')
gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# Extract keypoints and descriptors with ORB ---①
detector = cv2.ORB_create()
kp1, desc1 = detector.detectAndCompute(gray1, None)
kp2, desc2 = detector.detectAndCompute(gray2, None)

# Create a brute-force Hamming matcher ---②
# (NORM_HAMMING suits ORB's default WTA_K=2; NORM_HAMMING2 is meant for WTA_K=3 or 4)
matcher = cv2.BFMatcher(cv2.NORM_HAMMING)

# knnMatch with k=2: the two nearest neighbors of each descriptor ---③
matches = matcher.knnMatch(desc1, desc2, 2)

# Keep a match only if the first neighbor's distance is under 75% of the second's ---④
ratio = 0.75
good_matches = [first for first, second in matches \
                if first.distance < second.distance * ratio]
print('matches:%d/%d' % (len(good_matches), len(matches)))

# Draw only the good matches
res = cv2.drawMatches(img1, kp1, img2, kp2, good_matches, None, \
                      flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)

# Show the result
cv2.imshow('Matching', res)
cv2.waitKey()
cv2.destroyAllWindows()

- Keeps only the matches whose nearest-neighbor distance is less than 75% of the second-nearest neighbor's distance

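2. Perspective transform of the matched region

: Computing a perspective transform matrix from the correctly matched coordinates lets you mark where the matched object is in the image

: The object being compared may be slightly rotated or slightly different in size between the two images

: The perspective transform matrix accounts for this, so the target object can still be located well

: Matches that do not fit the perspective transform matrix can also be identified, so bad matches can be removed one more time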
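For reference, the perspective transform (homography) is a 3x3 matrix applied in homogeneous coordinates; dividing by the third component is what lets one matrix model rotation, scale and perspective skew together. This is the standard formulation, written with generic entries $h_{ij}$ mapping a point $(x, y)$ to $(u, v)$:

```latex
\begin{bmatrix} x' \\ y' \\ w' \end{bmatrix}
= \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}
  \begin{bmatrix} x \\ y \\ 1 \end{bmatrix},
\qquad
u = \frac{x'}{w'} = \frac{h_{11}x + h_{12}y + h_{13}}{h_{31}x + h_{32}y + h_{33}},
\quad
v = \frac{y'}{w'} = \frac{h_{21}x + h_{22}y + h_{23}}{h_{31}x + h_{32}y + h_{33}}
```

Because the matrix is only defined up to scale (OpenCV normalizes it so that $h_{33} = 1$), it has 8 unknowns. Each point pair contributes two equations, which is why at least 4 pairs are needed.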

mtrx, mask = cv2.findHomography(srcPoints, dstPoints, method, ransacReprojThreshold, mask, maxIters, confidence)

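: Computes a perspective transform matrix from many matched points

- srcPoints : source coordinate array

- dstPoints : destination coordinate array

- method=0 (optional) : approximation algorithm

  - 0 : least-squares fit using all points

  - cv2.RANSAC : instead of using all points at once, repeatedly picks a random subset, fits a matrix to it, and counts how many points agree; returns a mask separating inliers from outliers

  - cv2.LMEDS : least median of squares; works correctly only when at least 50% of the points are inliers

  - cv2.RHO : PROSAC-based; faster when there are many outliers

- ransacReprojThreshold=3 (optional) : maximum reprojection error, in pixels, for a point to count as an inlier (RANSAC and RHO only)

- maxIters=2000 (optional) : maximum number of iterations for the approximation

- confidence=0.995 (optional) : confidence level, between 0 and 1

- mtrx : resulting 3x3 transformation matrix

- mask : inlier flags, an N x 1 array (0: outlier, 1: inlier)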

dst = cv2.perspectiveTransform(src, m, dst)

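: Transforms the original coordinates with a perspective transformation matrix

- src : input coordinate array (N x 1 x 2, float)

- m : transformation matrix (3x3)

- dst (optional) : output coordinate array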

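: cv2.getPerspectiveTransform() returns the exact perspective transform matrix from exactly 4 corner points, whereas

: cv2.findHomography() returns a perspective transform matrix approximated from many points

: cv2.perspectiveTransform() returns a new array of coordinates transformed by that matrix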

# Finding the matched region with a perspective transform of the matches
import cv2, numpy as np

img1 = cv2.imread('img/taekwonv1.jpg')
img2 = cv2.imread('img/figures.jpg')
gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# ORB + BF-Hamming knnMatch ---①
detector = cv2.ORB_create()
kp1, desc1 = detector.detectAndCompute(gray1, None)
kp2, desc2 = detector.detectAndCompute(gray2, None)
matcher = cv2.BFMatcher(cv2.NORM_HAMMING)
matches = matcher.knnMatch(desc1, desc2, 2)

# Extract good matches with the 75% neighbor-distance ratio ---②
ratio = 0.75
good_matches = [first for first, second in matches \
                if first.distance < second.distance * ratio]
print('good matches:%d/%d' % (len(good_matches), len(matches)))

# Source-image coordinates from each good match's queryIdx ---③
src_pts = np.float32([kp1[m.queryIdx].pt for m in good_matches])
# Target-image coordinates from each good match's trainIdx ---④
dst_pts = np.float32([kp2[m.trainIdx].pt for m in good_matches])
# Compute the perspective transform matrix (least squares over all points) ---⑤
mtrx, mask = cv2.findHomography(src_pts, dst_pts)

# Corners of a rectangle the size of the source image ---⑥
h, w = img1.shape[:2]
pts = np.float32([[[0, 0]], [[0, h-1]], [[w-1, h-1]], [[w-1, 0]]])
# Perspective-transform the source corners ---⑦
dst = cv2.perspectiveTransform(pts, mtrx)
# Draw the transformed region on the target image ---⑧
img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)

# Draw the good matches and show the result ---⑨
res = cv2.drawMatches(img1, kp1, img2, kp2, good_matches, None, \
                      flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)
cv2.imshow('Matching Homography', res)
cv2.waitKey()
cv2.destroyAllWindows()

: Computes the perspective transform matrix from the good matches, then perspective-transforms a rectangle the size of the source image and draws it on the result image

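+) good_matches is a list filtered from the result of knnMatch()

: match() returns a list of DMatch objects, while knnMatch() and radiusMatch() return a list of DMatch lists (one list per query descriptor)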

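DMatch

: Object representing a single match result

- queryIdx : index into the query descriptors (desc1 in the examples above)

- trainIdx : index into the train descriptors (desc2)

- imgIdx : index of the train image (relevant when matching against several images)

- distance : distance between the two descriptors (smaller means more similar)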

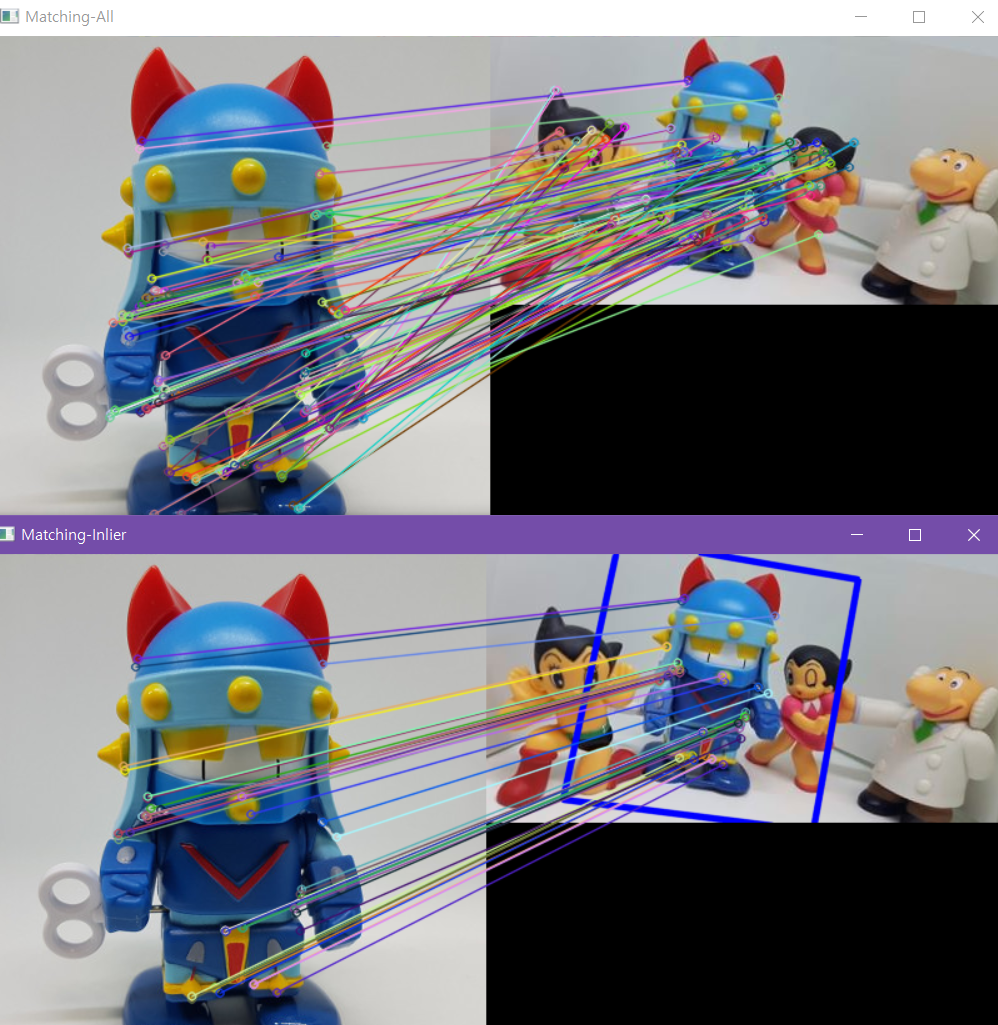

+) Removing more bad matches with a RANSAC approximation of the perspective transform

# Removing bad matches with a RANSAC approximation of the perspective transform
import cv2, numpy as np

img1 = cv2.imread('img/taekwonv1.jpg')
img2 = cv2.imread('img/figures2.jpg')
gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# ORB + BF-Hamming match (cross-check on) ---①
detector = cv2.ORB_create()
kp1, desc1 = detector.detectAndCompute(gray1, None)
kp2, desc2 = detector.detectAndCompute(gray2, None)
matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
matches = matcher.match(desc1, desc2)

# Sort the matches by distance, ascending ---②
matches = sorted(matches, key=lambda x: x.distance)

# Draw all matches ---③
res1 = cv2.drawMatches(img1, kp1, img2, kp2, matches, None, \
                       flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)

# Coordinates of all matches for the perspective transform ---④
src_pts = np.float32([kp1[m.queryIdx].pt for m in matches])
dst_pts = np.float32([kp2[m.trainIdx].pt for m in matches])

# Approximate the matrix with RANSAC (reprojection threshold 5.0) and mark the region ---⑤
mtrx, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
h, w = img1.shape[:2]
pts = np.float32([[[0, 0]], [[0, h-1]], [[w-1, h-1]], [[w-1, 0]]])
dst = cv2.perspectiveTransform(pts, mtrx)
img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)

# Draw only the inlier matches ---⑥
matchesMask = mask.ravel().tolist()
res2 = cv2.drawMatches(img1, kp1, img2, kp2, matches, None, \
                       matchesMask=matchesMask,
                       flags=cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS)

# Share of inliers among all matches, printed as a percentage ---⑦
accuracy = float(mask.sum()) / mask.size
print("accuracy: %d/%d(%.2f%%)" % (mask.sum(), mask.size, accuracy * 100))

# Show the results
cv2.imshow('Matching-All', res1)
cv2.imshow('Matching-Inlier', res2)
cv2.waitKey()
cv2.destroyAllWindows()

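- RANSAC was used when computing the perspective transform matrix, and the resulting mask was used to discard bad matches

- The mask marks each input coordinate, at the same index, with 1 for an inlier and 0 for an outlier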

This is tough,,,
