😎 First time meeting a studying, whiny alpaca?

[Kaggle] CNN Architectures

Written 2022-02-04

<This post was written while studying with reference to Kaggle>

https://www.kaggle.com/shivamb/cnn-architectures-vgg-resnet-inception-tl

CNN Architectures : VGG, ResNet, Inception + TL


https://wooono.tistory.com/233

[DL] LeNet-5, AlexNet, VGG-16, ResNet, Inception Network


1. CNN Architectures

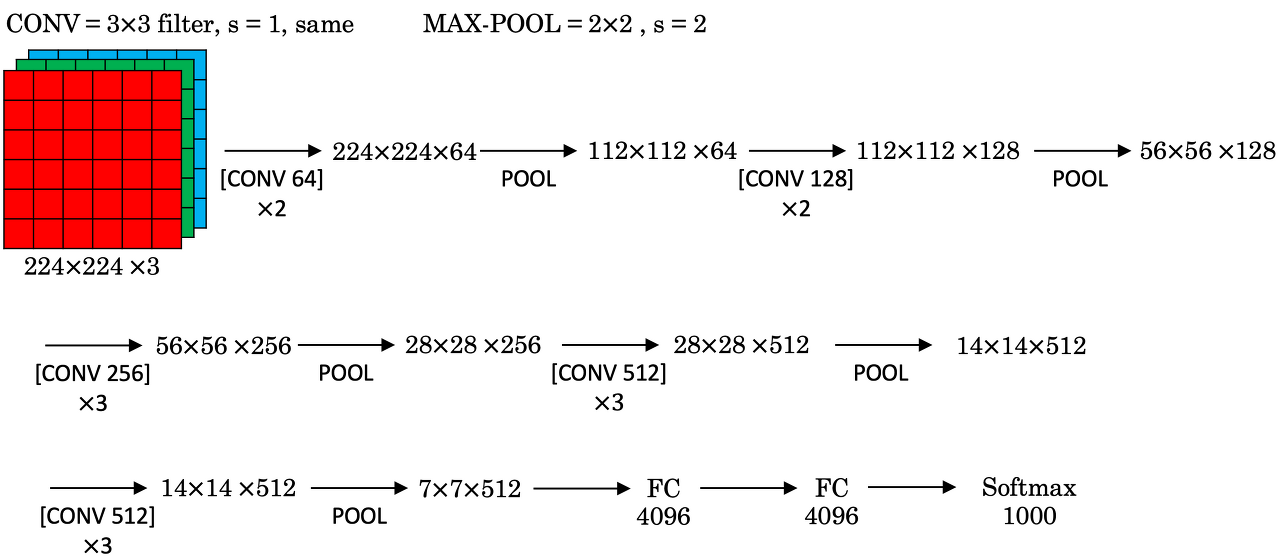

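- VGG16

: VGG16 has a simple, uniform structure but a very large number of parameters

: It repeatedly stacks Convolution Layers (filter = (3, 3), stride = 1, padding = same) and Pooling Layers (filter = (2, 2), stride = 2)

: The number of channels doubles from one convolution block to the next (64 → 128 → 256 → 512, then stays at 512), while the image height and width are halved at every Pooling Layer

: Features

- Uses Max Pooling

- Uses ReLU as the activation function

- Called VGG-16 because it has 16 layers with weights (13 convolution + 3 fully connected)

: Drawbacks

- The Fully Connected Layers hold a large number of parameters

- This leads to considerable memory cost and a risk of overfitting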
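To see where the parameters actually sit, here is a small sketch that traces the feature-map shape through the five blocks and counts the weights by hand. It assumes the standard VGG16 configuration and a 224x224 RGB input; the helper names `conv_params` and `dense_params` are made up for this illustration.

```python
# Sketch: trace VGG16 feature-map shapes and count parameters by hand.
# Assumes the standard VGG16 layout and a 224x224x3 (RGB) input.

def conv_params(kernel, c_in, c_out):
    """Weights of a kernel x kernel convolution plus one bias per filter."""
    return kernel * kernel * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """Weights of a fully connected layer plus one bias per unit."""
    return n_in * n_out + n_out

# (filters, number of 3x3 convolutions) for each of the five blocks
blocks = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]

size, channels = 224, 3
conv_total = 0
for filters, n_convs in blocks:
    for _ in range(n_convs):
        # "same" padding with stride 1 keeps height and width unchanged
        conv_total += conv_params(3, channels, filters)
        channels = filters
    size //= 2  # 2x2 max pooling with stride 2 halves height and width
    print(f"after block: {size}x{size}x{channels}")

flat = size * size * channels  # 7 * 7 * 512 = 25088
fc_total = (dense_params(flat, 4096)
            + dense_params(4096, 4096)
            + dense_params(4096, 1000))

print("conv parameters:", conv_total)             # 14714688
print("fc parameters:  ", fc_total)               # 123642856
print("total:          ", conv_total + fc_total)  # 138357544
```

Roughly 89% of the weights end up in the three fully connected layers, which is the memory cost mentioned in the drawbacks above.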

from keras.layers import Input, Conv2D, MaxPooling2D
from keras.layers import Dense, Flatten
from keras.models import Model

# Hand-built VGG16; 3 input channels (RGB) to match the images loaded later
_input = Input((224, 224, 3))

# Block 1: 64 filters
conv1 = Conv2D(filters=64, kernel_size=(3,3), padding="same", activation="relu")(_input)
conv2 = Conv2D(filters=64, kernel_size=(3,3), padding="same", activation="relu")(conv1)
pool1 = MaxPooling2D((2, 2))(conv2)

# Block 2: 128 filters
conv3 = Conv2D(filters=128, kernel_size=(3,3), padding="same", activation="relu")(pool1)
conv4 = Conv2D(filters=128, kernel_size=(3,3), padding="same", activation="relu")(conv3)
pool2 = MaxPooling2D((2, 2))(conv4)

# Block 3: 256 filters
conv5 = Conv2D(filters=256, kernel_size=(3,3), padding="same", activation="relu")(pool2)
conv6 = Conv2D(filters=256, kernel_size=(3,3), padding="same", activation="relu")(conv5)
conv7 = Conv2D(filters=256, kernel_size=(3,3), padding="same", activation="relu")(conv6)
pool3 = MaxPooling2D((2, 2))(conv7)

# Block 4: 512 filters
conv8 = Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu")(pool3)
conv9 = Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu")(conv8)
conv10 = Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu")(conv9)
pool4 = MaxPooling2D((2, 2))(conv10)

# Block 5: 512 filters
conv11 = Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu")(pool4)
conv12 = Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu")(conv11)
conv13 = Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu")(conv12)
pool5 = MaxPooling2D((2, 2))(conv13)

# Classifier head: two 4096-unit layers and a 1000-class softmax
flat = Flatten()(pool5)
dense1 = Dense(4096, activation="relu")(flat)
dense2 = Dense(4096, activation="relu")(dense1)
output = Dense(1000, activation="softmax")(dense2)

vgg16_model = Model(inputs=_input, outputs=output)

from keras.applications.vgg16 import decode_predictions

from keras.applications.vgg16 import preprocess_input
from keras.preprocessing import image
import matplotlib.pyplot as plt
from PIL import Image
import seaborn as sns
import pandas as pd
import numpy as np
import os

img1 = "dogs-vs-cats-redux-kernels-edition/train/cat.11679.jpg"
img2 = "dogs-vs-cats-redux-kernels-edition/train/dog.2811.jpg"
img3 = "dogs-vs-cats-redux-kernels-edition/train/cat.11679.jpg"
img4 = "dogs-vs-cats-redux-kernels-edition/train/dog.2811.jpg"
imgs = [img1, img2, img3, img4]

def _load_image(img_path):
    """Load one image as a preprocessed batch of shape (1, 224, 224, 3)."""
    img = image.load_img(img_path, target_size=(224, 224))
    img = image.img_to_array(img)
    img = np.expand_dims(img, axis=0)
    img = preprocess_input(img)
    return img

def _get_predictions(_model):
    # First figure: the four input images
    f, ax = plt.subplots(1, 4)
    f.set_size_inches(80, 40)
    for i in range(4):
        # Image.LANCZOS replaces Image.ANTIALIAS, which was removed in Pillow 10
        ax[i].imshow(Image.open(imgs[i]).resize((200, 200), Image.LANCZOS))
    plt.show()

    # Second figure: top-3 predicted classes and probabilities per image
    f, axes = plt.subplots(1, 4)
    f.set_size_inches(80, 20)
    for i, img_path in enumerate(imgs):
        img = _load_image(img_path)
        preds = decode_predictions(_model.predict(img), top=3)[0]
        b = sns.barplot(y=[c[1] for c in preds], x=[c[2] for c in preds], color="gray", ax=axes[i])
        b.tick_params(labelsize=55)
    f.tight_layout()

from keras.applications.vgg16 import VGG16

# Load the pre-trained VGG16 from a local weights file
vgg16_weights = 'dogs-vs-cats-redux-kernels-edition/vgg16_weights_tf_dim_ordering_tf_kernels.h5'
vgg16_model = VGG16(weights=vgg16_weights)

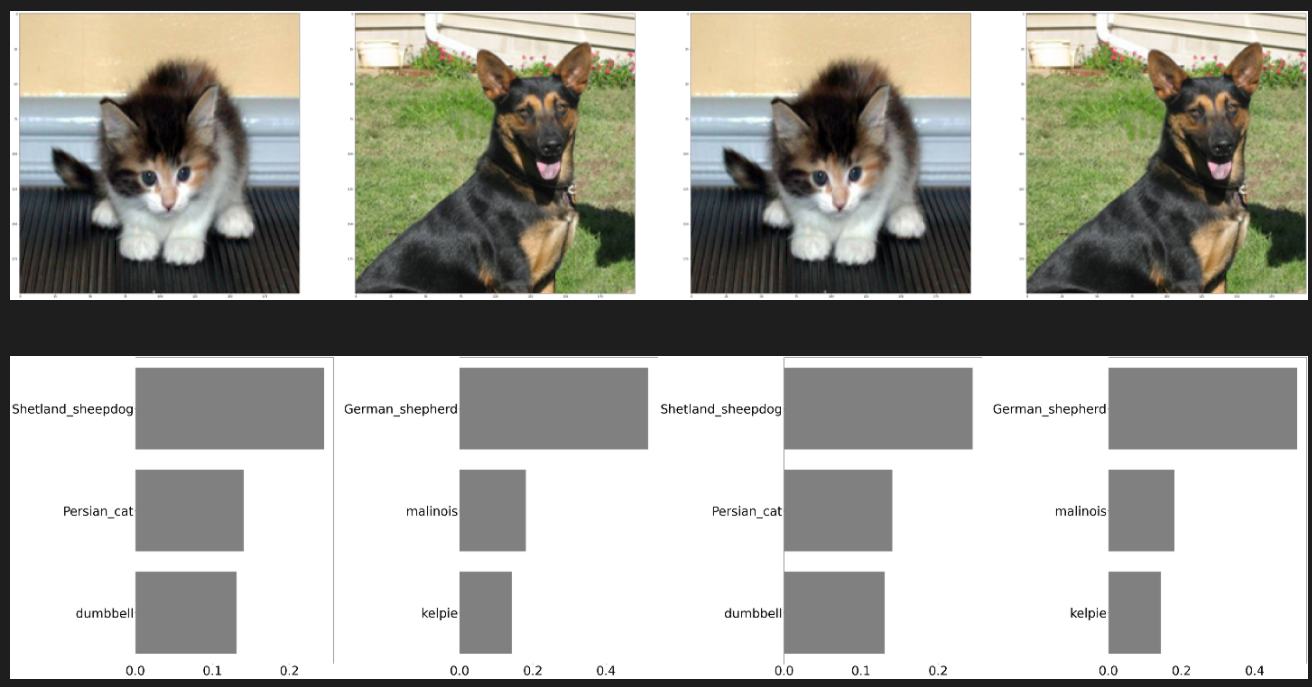

_get_predictions(vgg16_model)

- VGG19

: VGG19 has 19 layers

: It has the most parameters of the models compared here; the basic design matches VGG16, with 3 more Conv layers added

from keras.applications.vgg19 import VGG19

vgg19_weights = 'dogs-vs-cats-redux-kernels-edition/vgg19_weights_tf_dim_ordering_tf_kernels.h5'

vgg19_model = VGG19(weights=vgg19_weights)

_get_predictions(vgg19_model)

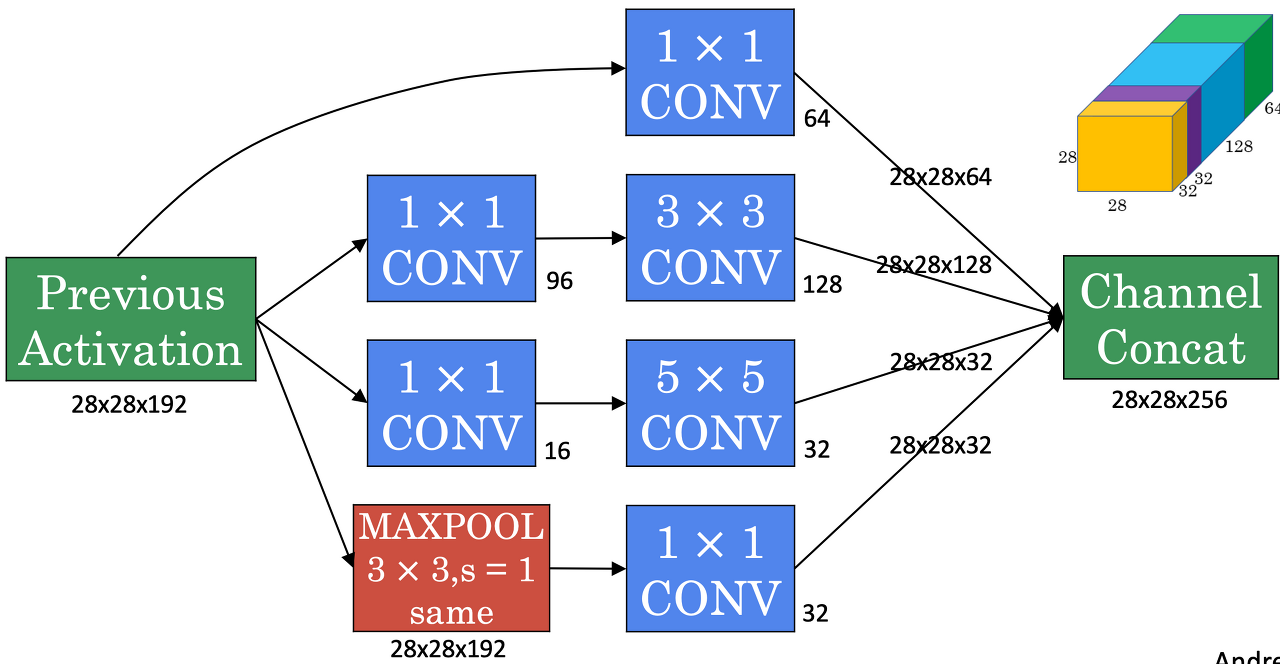

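- InceptionNet

1) 1 x 1 convolution

=> Reduces the number of channels while leaving the height and width of the volume unchanged

=> Uses a (1, 1, channel) convolution to shrink the channel dimension

=> Depending on the number of filters, a 1x1 convolution can reduce, keep, or increase the number of channels

2) Inception Module

: Applies Convolutions (1x1, 3x3, 5x5) and Max-Pooling (3x3) to the same input volume in parallel and stacks (concatenates) the results in the output

: Instead of choosing one filter size by hand, the network learns parameters for every filter-size combination

=> Four branches: 1x1 Conv, 1x1 Conv -> 3x3 Conv, 1x1 Conv -> 5x5 Conv, MAXPOOL -> 1x1 Conv

=> Note that a 1x1 Conv layer comes after the MAXPOOL layer

=> MAXPOOL cannot reduce the number of channels, so a 1x1 Conv is used to reduce them

=> Adding a 1x1 Convolution layer creates a bottleneck layer, which reduces the number of channels and the amount of computation

( A bottleneck layer is a (1, 1) Conv inserted in the middle of the Conv operations )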

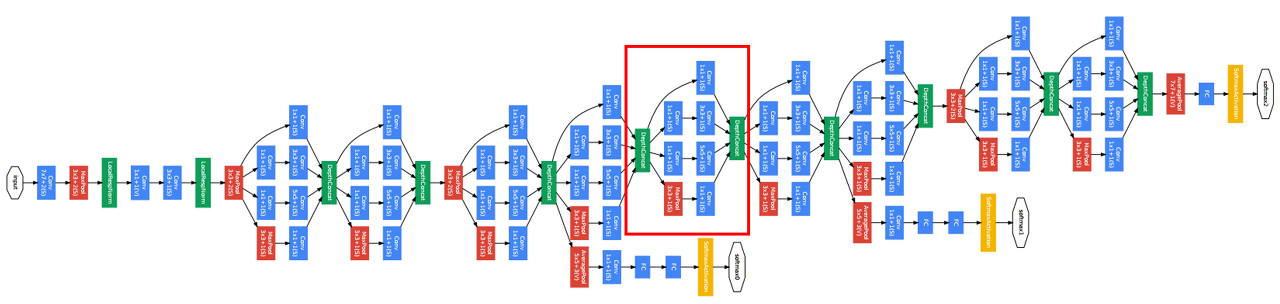
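How much does the bottleneck save? A quick back-of-the-envelope sketch: count the multiplications for a 5x5 convolution applied directly, and again with a 1x1 convolution in front of it. The sizes (28x28 input, 192 -> 16 -> 32 channels) are illustrative numbers chosen for this example, not values from the notebook, and `conv_mults` is a made-up helper.

```python
# Sketch: multiplication count with and without a 1x1 bottleneck.
# All sizes below are illustrative assumptions.

def conv_mults(h, w, kernel, c_in, c_out):
    """Multiplications for a stride-1, 'same'-padded convolution."""
    return h * w * c_out * kernel * kernel * c_in

h = w = 28
c_in, c_mid, c_out = 192, 16, 32

# 5x5 convolution applied directly to all 192 input channels
direct = conv_mults(h, w, 5, c_in, c_out)

# 1x1 convolution down to 16 channels, then the 5x5 convolution
bottleneck = conv_mults(h, w, 1, c_in, c_mid) + conv_mults(h, w, 5, c_mid, c_out)

print("direct 5x5:       ", direct)       # 120422400
print("1x1 then 5x5:     ", bottleneck)   # 12443648
print(f"reduction factor: {direct / bottleneck:.1f}x")
```

The output volume has the same shape either way (28x28x32); only the cost of getting there changes.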

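3) Inception (GoogLeNet) Network

: A stack of Inception Modules

: Max Pooling layers are inserted between groups of modules

: A Fully connected layer at the end of the model produces the output

: Auxiliary softmax layers are attached partway through the network; they help the parameters update well, so that even the intermediate outputs perform reasonably

: This gives a Regularization effect and helps prevent overfitting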
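As a rough sketch of how the auxiliary softmax outputs enter training: in the GoogLeNet paper their losses are added to the main loss with a weight of 0.3, and the auxiliary heads are dropped at inference time. The probabilities below are hypothetical numbers for a 3-class toy case, only there to make the arithmetic concrete.

```python
import math

def cross_entropy(probs, true_index):
    """Cross-entropy loss for one sample given softmax probabilities."""
    return -math.log(probs[true_index])

# Hypothetical softmax outputs (3 classes) from the main and auxiliary heads
main_probs = [0.7, 0.2, 0.1]
aux1_probs = [0.5, 0.3, 0.2]
aux2_probs = [0.6, 0.3, 0.1]
true_index = 0

AUX_WEIGHT = 0.3  # discount weight for auxiliary losses in the GoogLeNet paper

main_loss = cross_entropy(main_probs, true_index)
aux_loss = cross_entropy(aux1_probs, true_index) + cross_entropy(aux2_probs, true_index)
total_loss = main_loss + AUX_WEIGHT * aux_loss

print(f"main loss:  {main_loss:.4f}")
print(f"total loss: {total_loss:.4f}")
```

Because the auxiliary heads sit in the middle of the network, their gradient reaches the early layers over a shorter path than the gradient from the final output.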

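- ResNet

: Developed by Microsoft to train very deep networks, which otherwise suffer from Vanishing Gradient and Exploding Gradient problems

: Introduces the Residual block

: Adds a shortcut so that the output of the l-th nonlinearity is added to the input of the (l+2)-th nonlinearity

: The design basically follows the VGG19 structure

: Convolution layers are added to make it deeper, and shortcuts are added

: There is a 34-layer residual network and a 34-layer plain network, which is the same structure without the shortcuts

: Except for the first layer, 3x3 convolution layers are used uniformly

: Whenever the image size is halved, the number of channels is doubled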
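The shortcut can be written as a[l+2] = relu(z[l+2] + a[l]). Below is a minimal pure-Python sketch of that formula. To keep it short, small dense layers stand in for the convolution layers, which is a simplification for illustration only; the function and variable names are made up.

```python
# Sketch of a residual block: a[l+2] = relu(z[l+2] + a[l]).
# Dense layers replace the convolutions here purely for brevity.

def relu(v):
    return [max(0.0, x) for x in v]

def linear(W, b, v):
    """Matrix-vector product plus bias, on plain Python lists."""
    return [sum(w_ij * x for w_ij, x in zip(row, v)) + b_i
            for row, b_i in zip(W, b)]

def residual_block(a_l, W1, b1, W2, b2):
    a_l1 = relu(linear(W1, b1, a_l))    # a[l+1]
    z_l2 = linear(W2, b2, a_l1)         # z[l+2], before the nonlinearity
    # Shortcut: add the block input just before the second nonlinearity
    return relu([z + s for z, s in zip(z_l2, a_l)])

a_l = [1.0, 2.0, 0.5]
zero_W = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3

# With all weights at zero the block simply passes its input through
out = residual_block(a_l, zero_W, zero_b, zero_W, zero_b)
print(out)  # [1.0, 2.0, 0.5]
```

This is why stacking more residual blocks should not hurt: a block can fall back to the identity mapping by pushing its weights toward zero, and the shortcut also gives the gradient a direct path backward.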

- XceptionNet

: An extreme version of the Inception module

: Uses a modified Depthwise separable convolution ( a conv applied to each channel separately, with a 1x1 conv applied to the result )

: The idea is to completely separate the channel-wise and spatial convolutions by using depthwise separable convolution
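To get a feel for why the separation helps, here is a small sketch comparing the weight count of a standard convolution with a depthwise separable one. Biases are ignored, and the layer size (3x3 kernel, 128 -> 256 channels) is an assumed example, not a layer taken from Xception.

```python
# Sketch: weight count of a standard vs. a depthwise separable convolution.
# Biases are ignored; the layer size is an illustrative assumption.

def standard_conv_weights(kernel, c_in, c_out):
    # Every output channel looks at every input channel spatially
    return kernel * kernel * c_in * c_out

def separable_conv_weights(kernel, c_in, c_out):
    depthwise = kernel * kernel * c_in   # one spatial filter per input channel
    pointwise = c_in * c_out             # 1x1 conv that mixes the channels
    return depthwise + pointwise

standard = standard_conv_weights(3, 128, 256)
separable = separable_conv_weights(3, 128, 256)

print("standard: ", standard)    # 294912
print("separable:", separable)   # 33920
print(f"ratio: {standard / separable:.1f}x fewer weights")
```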

2. Image Feature Extraction

# VGG16 without the classifier head, used as a fixed feature extractor
vgg1616 = VGG16(weights="imagenet", include_top=False)

def _get_features(img_path):
    """Return the VGG16 convolutional features for one image."""
    img = image.load_img(img_path, target_size=(224, 224))
    img_data = image.img_to_array(img)
    img_data = np.expand_dims(img_data, axis=0)
    img_data = preprocess_input(img_data)
    features = vgg1616.predict(img_data)
    return features

img_path = "dogs-vs-cats-redux-kernels-edition/train/dog.2811.jpg"
vgg16_features = _get_features(img_path)

features_representation_1 = vgg16_features.flatten()   # one long 1-D vector
features_representation_2 = vgg16_features.squeeze()   # batch axis removed

print("Shape 1: ", features_representation_1.shape)
print("Shape 2: ", features_representation_2.shape)
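For reference, the two shapes can be checked without loading the model. With `include_top=False` and a 224x224 input, VGG16 produces a feature map of shape (1, 7, 7, 512), so the sketch below uses a zero array of that shape as a stand-in for the `predict` output (the name `fake_features` is made up for this example).

```python
import numpy as np

# Stand-in for vgg1616.predict(img_data) on one 224x224 image:
# VGG16 without its top layers outputs a (1, 7, 7, 512) feature map.
fake_features = np.zeros((1, 7, 7, 512), dtype=np.float32)

flat = fake_features.flatten()      # everything in a single 1-D vector
squeezed = fake_features.squeeze()  # only the size-1 batch axis is dropped

print("Shape 1: ", flat.shape)      # (25088,)
print("Shape 2: ", squeezed.shape)  # (7, 7, 512)
```

The flattened form is the one fed to a classical classifier, since each image becomes a single row of 25088 features.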

3. Transfer Learning

basepath = "dogs-vs-cats-redux-kernels-edition/train/"

class1 = os.listdir(basepath + "dog/")
class2 = os.listdir(basepath + "cat/")

# 10 training images per class, plus one held-out image per class for testing
data = {'dog': class1[:10],
        'cat': class2[:10],
        'test': [class1[11], class2[11]]}

features = {"dog": [], "cat": [], "test": []}
testimgs = []
for label, val in data.items():
    for k, each in enumerate(val):
        if label == "test" and k == 0:
            img_path = os.path.join(basepath, "dog", each)
            testimgs.append(img_path)
        elif label == "test" and k == 1:
            img_path = os.path.join(basepath, "cat", each)
            testimgs.append(img_path)
        else:
            # Folder names are lowercase, matching the os.listdir calls above
            img_path = os.path.join(basepath, label, each)
        feats = _get_features(img_path)
        features[label].append(feats.flatten())

# One row per image: flattened features plus a label column.
# pd.concat is used because DataFrame.append was removed in pandas 2.0.
frames = []
for label, feats in features.items():
    temp_df = pd.DataFrame(feats)
    temp_df['label'] = label
    frames.append(temp_df)
dataset = pd.concat(frames, ignore_index=True)
dataset.head()

# Train only on the dog/cat rows; the 'test' rows are held out
y = dataset[dataset.label != 'test'].label
X = dataset[dataset.label != 'test'].drop('label', axis=1)

from sklearn.feature_selection import VarianceThreshold
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline

# Drop zero-variance features, then fit a small MLP on the VGG16 features
model = MLPClassifier(hidden_layer_sizes=(100, 10))
pipeline = Pipeline([('low_variance_filter', VarianceThreshold()), ('model', model)])
pipeline.fit(X, y)

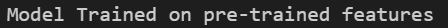

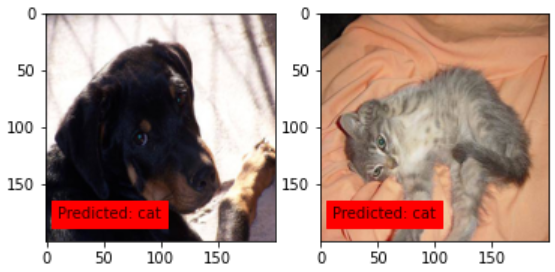

print("Model Trained on pre-trained features")

preds = pipeline.predict(features['test'])

f, ax = plt.subplots(1, 2)
for i in range(2):
    # Image.LANCZOS replaces Image.ANTIALIAS, which was removed in Pillow 10
    ax[i].imshow(Image.open(testimgs[i]).resize((200, 200), Image.LANCZOS))
    ax[i].text(10, 180, 'Predicted: %s' % preds[i], color='k', backgroundcolor='red', alpha=0.8)
plt.show()

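There were no separate dog and cat folders, so I made them myself,

moved 91 images into each.... and trained on those

There isn't enough data, so the dog prediction seems to come out wrong ㅜㅡㅜ

Also, the models other than VGG

threw errors,,, why??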
