😎 First time with the studying Jingjing Alpaca?

[DACON] Korea East-West Power Solar Power Generation Forecasting AI Competition


Written 220914

<This post was written while studying the DACON competition baseline and haenara-shin's GitHub repo :-) >

GitHub - haenara-shin/DACON: DACON competition code repos.


https://dacon.io/competitions/official/235720/codeshare/2512?page=1&dtype=recent

[Public LB-9.4531] Baseline Code / LightGBM (revised)

Korea East-West Power Solar Power Generation Forecasting AI Competition

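😎 1. Competition overview

Solar power generation is affected every day by the weather and by the seasonal amount of solar irradiance.

If it can be predicted, power supply and demand can be planned more smoothly.

Let's build an AI-based solar power generation forecasting model!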

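- Solar power generation depends on the daily "weather conditions" and the seasonal "solar irradiance"

- Input dataset: 7 days of data (Day 0 ~ Day 6)

- Prediction (target): power generation at 30-minute intervals over the next 2 days (Day 7 ~ Day 8), i.e. 48 values per day and 96 timesteps in total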

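😎 2. Data composition

- dataset

  - train.csv: 3 years (Day 0 ~ Day 1094) of weather data and power generation (target) data

  - test folder files: the data used to produce the submitted answers

    - 81 files of weather and power generation (target) data drawn from a 2-year period. The file order is random and unrelated to time order.

    - Use all or part of the 7 days of data in each file as input to predict the power generation (target) at 30-minute intervals for the next 2 days (48 per day, 96 timesteps in total)

  - submission.csv: the answer submission file

    - For each file in the test folder, predict the power generation for every time slot at 9 quantiles (0.1, 0.2 ... 0.9), with ids in the 'filename_day_time' format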

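- Loss

  - Pinball loss

    - At quantiles above 50%, under-forecasting is penalized heavily

      - At a high quantile, the observed value should usually fall below the prediction, i.e. the loss pushes the model to over-forecast

    - At quantiles below 50%, over-forecasting is penalized heavily

      - At a low quantile, the observed value should usually fall above the prediction, i.e. the loss pushes the model to under-forecast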
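A minimal NumPy sketch of the pinball loss makes the asymmetry above concrete. It uses the same max(q·err, (q−1)·err) form as the `quantile_loss` function in the code section, with err = y − f. The name `pinball_loss` and the 10 kW example values are made up for illustration.

```python
import numpy as np

def pinball_loss(q, y, f):
    """Mean pinball (quantile) loss for quantile level q, with err = y - f."""
    err = np.asarray(y, dtype=float) - np.asarray(f, dtype=float)
    return np.mean(np.maximum(q * err, (q - 1) * err))

# Same true value (10 kW), forecasts 2 kW too low vs. 2 kW too high
for q in (0.1, 0.9):
    under = pinball_loss(q, 10.0, 8.0)   # under-forecast: err = +2
    over = pinball_loss(q, 10.0, 12.0)   # over-forecast:  err = -2
    print(f"q={q}: under-forecast loss={under:.1f}, over-forecast loss={over:.1f}")
```

It prints `q=0.1: under-forecast loss=0.2, over-forecast loss=1.8` and `q=0.9: under-forecast loss=1.8, over-forecast loss=0.2`. The same 2 kW miss costs nine times more on the "wrong" side of the quantile.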

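- QR

  - percentile: the value at a given position, expressed as a percentage, when the data values are sorted in order. Positions run from the smallest value (0) to the largest (100)

  - percentile rank: where a specific value sits within the whole data set

  - quantile: the general term for such cut points, expressed as a fraction between 0 and 1. Quartiles cut the data every 0.25, and this competition uses the 0.1 ~ 0.9 deciles

  - Providing a range of predicted values gives a more "stable or trustworthy" forecasting guide

    - Predicting separate quantiles with QR (Quantile Regression) is therefore more "trustworthy or stable"

  - Why QR is more meaningful than OLS (ordinary least squares, which only gives a mean estimate)

    - QR can model the whole conditional distribution of the target variable (OLS only gives the conditional mean)

    - QR makes no assumption about the target distribution, so it is more robust to mis-specification of the error distribution

    - QR is less sensitive to outliers

    - QR is equivariant under monotonic transformations (such as log): the quantile of log(Y) is the log of the quantile of Y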
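Why does minimizing pinball loss amount to quantile regression? Here is a small sketch that assumes a made-up sample y = 1, 2, ..., 100. It uses a brute-force grid search in place of a real model. The constant prediction with the lowest average pinball loss at q = 0.9 turns out to be a 0.9-quantile of the sample.

```python
import numpy as np

def pinball_loss(q, y, f):
    """Mean pinball loss of a (possibly constant) prediction f."""
    err = np.asarray(y, dtype=float) - f
    return np.mean(np.maximum(q * err, (q - 1) * err))

# Hypothetical sample of observed values: 1, 2, ..., 100
y = np.arange(1, 101, dtype=float)
q = 0.9

# Try every constant prediction on a 0.1 grid and keep the one with the lowest loss
candidates = np.linspace(0, 100, 1001)
losses = np.array([pinball_loss(q, y, c) for c in candidates])
best = candidates[np.argmin(losses)]

print("best constant prediction:", best)
print("np.quantile(y, 0.9):", np.quantile(y, q))
```

The minimizer lands in the interval [90, 91]. Any value in that interval gives the same minimal loss, including `np.quantile(y, 0.9)` (about 90.1 with NumPy's default linear interpolation). A model trained with this loss for each q therefore learns conditional quantiles rather than the conditional mean.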

😎 3. Code implementation

1️⃣ Package load

import pandas as pd

# imort cupy as cp

import numpy as np

import os

import random

import math

from scipy.optimize import curve_fit # Use non-linear least squares to fit a function - for the zenith angle calculation

import warnings

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

warnings.filterwarnings("ignore")

from sklearn.model_selection import train_test_split

import tensorflow as tf

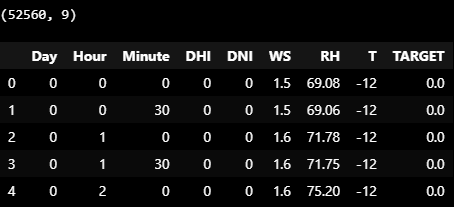

import tensorflow_addons as tfatrain = pd.read_csv('data/train/train.csv')

print(train.shape)

train.head()

- Day: day index

- Hour: hour

- Minute: minute

- DHI: Diffuse Horizontal Irradiance (W/m²)

- DNI: Direct Normal Irradiance (W/m²)

- WS: Wind Speed (m/s)

- RH: Relative Humidity (%)

- T: Temperature (°C)

- TARGET: solar power generation (kW)

train.describe()

train.info()

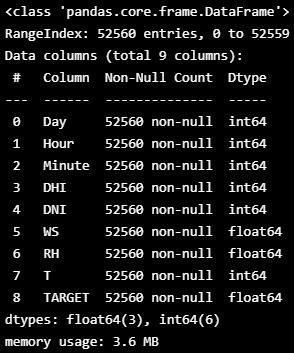

- plot feature data distribution

## plot feature data distribution
n_cols = train.shape[1]//2 + 1
fig, ax = plt.subplots(2, n_cols, figsize=(20, 6))
for idx, feature in enumerate(train.columns):
    data = train[feature]
    if idx < n_cols:
        ax[0, idx].hist(data, bins=10, alpha=0.5)
        ax[0, idx].set_title(feature)
    else:
        ax[1, idx - n_cols].hist(data, bins=10, alpha=0.5)
        ax[1, idx - n_cols].set_title(feature)
plt.show()

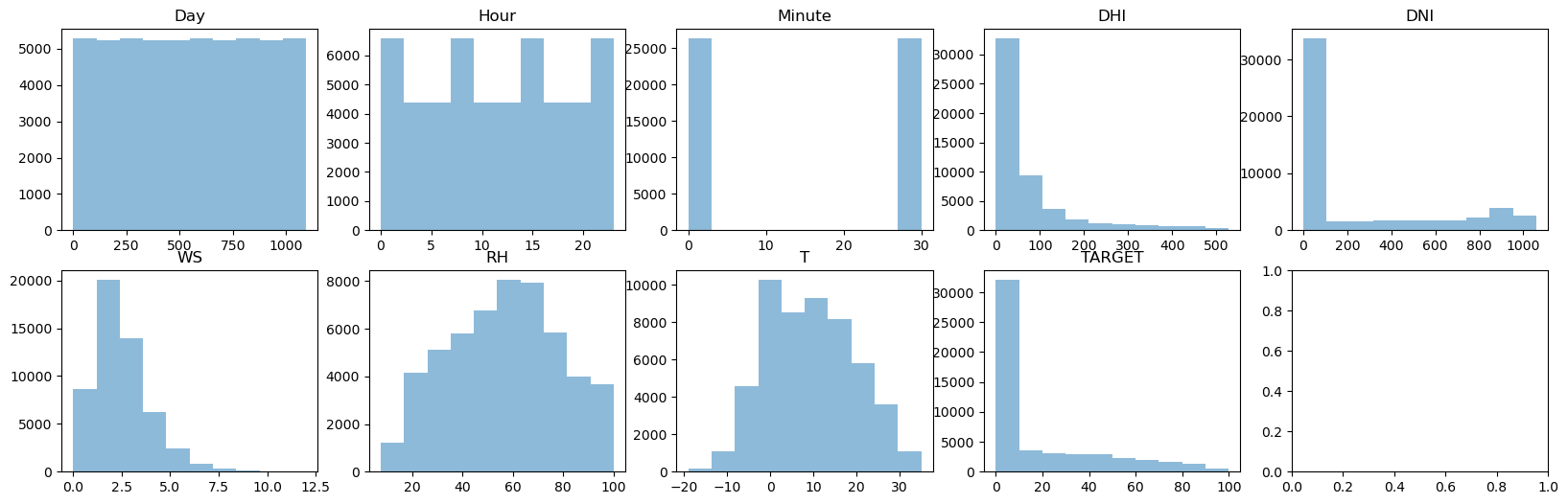

- Relationship (correlation) between each feature (x) and the target

fig, axes = plt.subplots(2, 3, figsize=(10,7))

train.plot(x='Hour', y='TARGET', kind='scatter', alpha=0.1, ax=axes[0,0])

train.plot(x='DHI', y='TARGET', kind='scatter', alpha=0.1, ax=axes[0,1])

train.plot(x='DNI', y='TARGET', kind='scatter', alpha=0.1, ax=axes[0,2])

train.plot(x='WS', y='TARGET', kind='scatter', alpha=0.1, ax=axes[1,0])

train.plot(x='RH', y='TARGET', kind='scatter', alpha=0.1, ax=axes[1,1])

train.plot(x='T', y='TARGET', kind='scatter', alpha=0.1, ax=axes[1,2])

fig.tight_layout()

2️⃣ Data preprocessing (feature engineering)

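- Merge Hour and Minute into a single float column, drop the Day column, and then use trigonometric functions (sin, cos) to represent time as a continuous, cyclical feature

- Mean of TARGET at the same time of day over the most recent 3 and 5 days

- Compute the dew point from temperature and relative humidity

  - Rather than feeding temperature and relative humidity in as features directly, it is more reasonable to derive the dew point (the temperature at which condensation forms) as a new feature

  - Reference: "Effects of dust, humidity, and wind speed on solar cell efficiency"

- Estimate sunrise/sunset times

  - Estimated from the time steps where DHI > 0

- Compute the zenith angle by fitting a quadratic function for each day, using the sunrise/sunset times to capture annual and daily seasonality

- Compute GHI from the zenith angle, DNI, and DHI

  - Solar panels in Korea are fixed-mount, so we need GHI (Global Horizontal Irradiance, the total irradiance on a horizontal surface)

  - Reference: "Construction of typical meteorological data suitable for solar energy systems and analysis of irradiance data"

  - GHI = DHI + DNI × cos(θ_zenith)

  - Reference: "Uncertainty of typical meteorological year data and solar power forecasts depending on the direct/diffuse irradiance separation model"

  - (solar zenith angle) + (solar altitude angle) = 90°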
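The three formulas above (cyclical time, dew point, GHI) can be checked in isolation with small helper functions. This is a sketch with hypothetical helper names. The Magnus constants b = 17.62 and c = 243.12 are the ones used in `preprocess_data` below, and the zenith angle is assumed to be in degrees.

```python
import numpy as np

def cyclical_time(hour):
    """Encode hour-of-day (float, e.g. 13.5 for 13:30) as a point on the unit circle."""
    angle = 2 * np.pi * np.asarray(hour, dtype=float) / 24
    return np.cos(angle), np.sin(angle)

def dew_point(t_celsius, rh_percent, b=17.62, c=243.12):
    """Magnus-formula dew point (°C) from temperature (°C) and relative humidity (%)."""
    gamma = b * t_celsius / (c + t_celsius) + np.log(rh_percent / 100)
    return c * gamma / (b - gamma)

def ghi(dhi, dni, zenith_deg):
    """GHI = DHI + DNI * cos(zenith), with the zenith angle given in degrees."""
    return dhi + dni * np.cos(np.radians(zenith_deg))

# 23:30 and 00:00 are neighbors on the circle, although their raw hours differ by 23.5
c1, s1 = cyclical_time(23.5)
c2, s2 = cyclical_time(0.0)
print("distance 23:30 -> 00:00:", np.hypot(c1 - c2, s1 - s2))

print("dew point at 20 °C, 100 % RH:", dew_point(20.0, 100.0))
print("GHI with the sun at the zenith:", ghi(100.0, 800.0, 0.0))
```

Two sanity checks follow from the formulas. At 100 % relative humidity the dew point equals the air temperature. With the sun directly overhead (zenith 0°), GHI is simply DHI + DNI.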

# 일별 2차 함수 근사를 통해 zenith angle 산출

def obj_curve(x, a, b, c):

return a*(x-b)**2+cdef preprocess_data(data, is_train=True):

temp = data.copy()

## cyclical time feature로 변환하기 위해 전처리

temp.Hour = temp.Hour + temp.Minute/60

temp.drop(['Minute','Day'], axis=1, inplace=True)

## 시계열(시간)을 cyclical encoded time feature로 변환 (add cyclical encoded time feature), continueous time feature임

temp['cos_time'] = np.cos(2*np.pi*(temp.Hour/24))

temp['sin_time'] = np.sin(2*np.pi*(temp.Hour/24))

## add 3day & 5day mean value for target according to Hour

## 가장 마지막 3, 5일 동시간 Target값의 평균

temp['shft1'] = temp['TARGET'].shift(48)

temp['shft2'] = temp['TARGET'].shift(48*2)

temp['shft3'] = temp['TARGET'].shift(48*3)

temp['shft4'] = temp['TARGET'].shift(48*4)

temp['avg3'] = np.mean(temp[['TARGET', 'shft1', 'shft2']].values, axis=-1)

temp['avg5'] = np.mean(temp[['TARGET', 'shft1', 'shft2', 'shft3','shft4']].values, axis=-1)

temp.drop(['shft1','shft2','shft3','shft4'], axis=1, inplace=True)

## 이슬점(결로 현상 발생 지점) feature 계산

c = 243.12

b = 17.62

gamma = (b * (temp['T']) / (c + (temp['T']))) + np.log(temp['RH'] / 100)

dp = ( c * gamma) / (b - gamma)

temp['Td']=dp

# zenith angle의 근사를 통해 GHI 구함

# 1. 일출/일볼 시간 추정 (DHI > 0)

# 2. zenith angle 근사는 연별, 일별 계절 근사로 구함

# 3. GHI 계산 (calculated from DNI DHI and zenith angle)

for day in temp.rolling(window = 48):

if day.values[0][0] == 0 and day.shape[0] == 48:

sun_rise = day[day.DHI > 0]

sun_rise['zenith'] = np.nan

sunrise = sun_rise.Hour.values[0]

sunset = sun_rise.Hour.values[-1]

peak = (sunrise + sunset)/2

param, _ = curve_fit(obj_curve, # 일별 2차 함수 근사를 통해 zenith angle 산출

[sunrise-0.5, peak, sunset+0.5],

[90, (sunrise-6.5)/1.5*25+35, 90],

p0=[0.5, peak, 36],

bounds=([0.01, (sunrise+sunset)/2-1, 10],

[1.2, (sunrise+sunset)/2+1, 65]))

temp.loc[day.index,'zenith']= obj_curve(day.Hour, *param)

## 태양의 zenitn angle 말고 지평선에서부터 계산한 각도

temp['altitude'] = 90 - temp.zenith

temp['GHI'] = temp.DHI + temp.DNI * np.cos(temp.zenith * math.pi / 180)

temp = temp[['Hour','cos_time','sin_time','altitude','GHI','DHI','DNI','WS','RH','T','Td','avg3','avg5','TARGET']]

## 훈련 데이터에 대해서, 컬럼의 맨 마지막 2줄에 target values 를 더함

if is_train==True:

temp['Target1'] = temp['TARGET'].shift(-48)

temp['Target2'] = temp['TARGET'].shift(-48*2)

else:

pass

## 처음 4일은 drop. nan values 들이 채워져 있음

## 훈련 데이터에 대해서, 마지막 2일은 추가적으로 드랍

temp = temp.dropna()

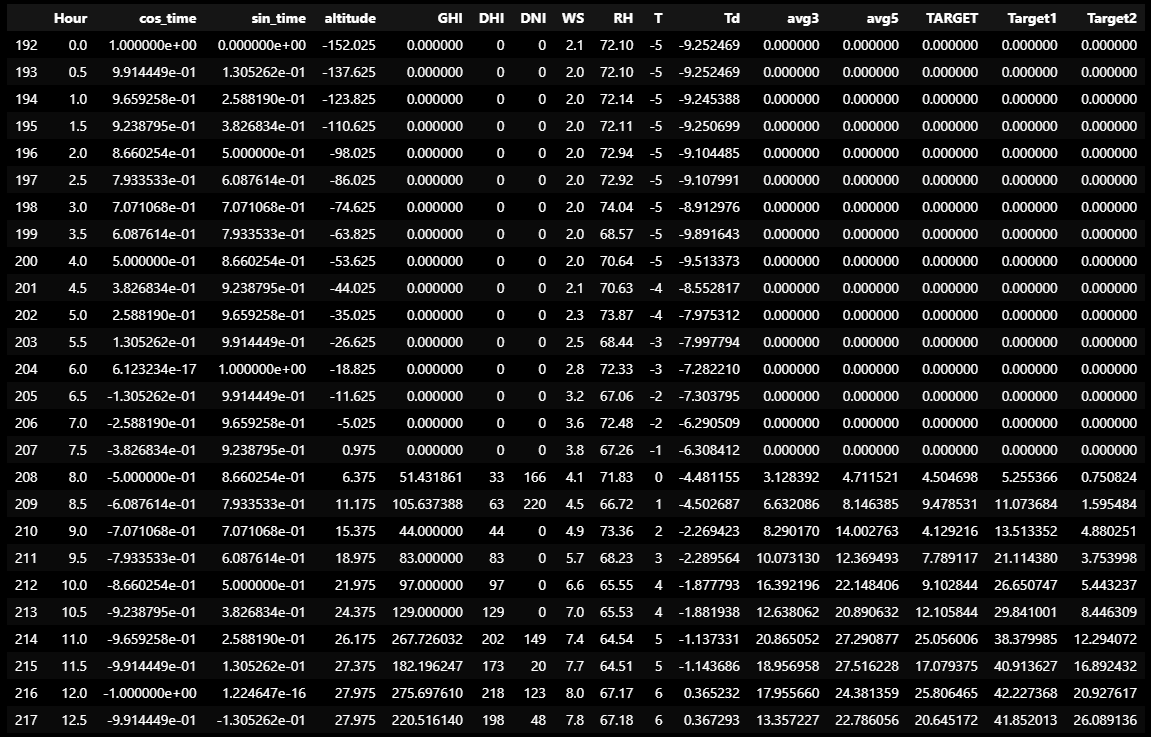

return tempdf_train = preprocess_data(train)

df_train.iloc[:48]

df_train.columns

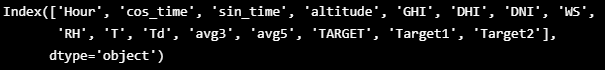
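To see what the `curve_fit` call in `preprocess_data` does for a single day, here is a standalone sketch. It assumes a hypothetical day with sunrise at 7:30 and sunset at 18:30, chosen so that an exact fit lies inside the bounds. It reuses the three anchor points, initial guess, and bounds of the code above. The noon-zenith heuristic `(sunrise - 6.5)/1.5*25 + 35` is taken as-is from that code, not derived here.

```python
import warnings
import numpy as np
from scipy.optimize import curve_fit, OptimizeWarning

def obj_curve(x, a, b, c):
    """Quadratic zenith-angle model in degrees: a * (x - b)**2 + c."""
    return a * (x - b) ** 2 + c

# 3 points and 3 parameters leave no degrees of freedom, so scipy warns that the
# covariance cannot be estimated; only the fitted parameters are needed here
warnings.simplefilter("ignore", OptimizeWarning)

# Hypothetical day: first/last half-hour slots with DHI > 0
sunrise, sunset = 7.5, 18.5
peak = (sunrise + sunset) / 2  # solar-noon estimate, 13.0 here

# Same anchors as preprocess_data: zenith = 90° half an hour outside sunrise/sunset,
# plus a noon zenith that grows with a later sunrise (lower sun in winter)
x_pts = [sunrise - 0.5, peak, sunset + 0.5]
y_pts = [90, (sunrise - 6.5) / 1.5 * 25 + 35, 90]

param, _ = curve_fit(
    obj_curve, x_pts, y_pts,
    p0=[0.5, peak, 36],
    bounds=([0.01, peak - 1, 10], [1.2, peak + 1, 65]),
)

hours = np.arange(sunrise, sunset + 0.5, 0.5)  # half-hour slots from sunrise to sunset
zenith = obj_curve(hours, *param)
altitude = 90 - zenith  # zenith + altitude = 90°
print("fitted a, b, c:", np.round(param, 3))
print("noon altitude (deg):", round(float(altitude[hours == peak][0]), 2))
```

With three points and three parameters, the parabola passes through all the anchors. The fitted curve is symmetric around 13:00, and the noon altitude is 90° minus the fitted c. On days that would need a curvature above the upper bound a = 1.2, the bounded fit cannot hit all three points. It returns the closest admissible parabola instead.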

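3️⃣ Feature selection & model building

- Check the correlations between variables

f, ax = plt.subplots(figsize=(10,8))
corr = df_train.corr()
sns.heatmap(corr, mask=np.zeros_like(corr, dtype=bool), square=True, annot=True, ax=ax)  # np.bool was removed in NumPy 1.24

- Model Day 7 and Day 8 separately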

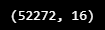

print(df_train.shape)

tf_train = []
for day in df_train.rolling(48):  # iterating over rolling(48) yields successive 48-row windows
    # keep only full windows that start at midnight (Hour == 0), i.e. one window per day
    if day.shape[0] == 48 and day.values[0][0] == 0:
        day = day.drop(['Td', 'WS'], axis=1)  # drop the features with low correlation to TARGET
        tf_train.append(day.values)
tf_train = np.asarray(tf_train)  # stack the (48, n_features) windows into a 3D array

df_test = []
for i in range(81):  # there are 81 test files
    file_path = './data/test/' + str(i) + '.csv'
    temp = pd.read_csv(file_path)
    temp = preprocess_data(temp, is_train=False)
    temp = temp.drop(['Td', 'WS'], axis=1)
    temp = temp.values[-48:, :]  # use only the last day (Day 6) as model input
    df_test.append(temp)
tf_test = np.asarray(df_test)

print(tf_train.shape)
print(tf_test.shape)
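The `rolling(48)` loop above slides one row at a time and keeps only the windows whose first `Hour` is 0, which gives one window per calendar day. Suppose the frame holds only whole days starting at midnight, which should hold after `dropna()` trims whole days at both ends. In that case the same 3D array can be built with a single reshape. The helper `to_daily_windows` and the toy frame below are hypothetical.

```python
import numpy as np
import pandas as pd

STEPS_PER_DAY = 48  # 30-minute resolution

def to_daily_windows(df, drop_cols=()):
    """Stack a half-hourly frame into an array of shape (n_days, 48, n_features).

    Assumes df holds whole days only, each starting at Hour == 0."""
    values = df.drop(columns=list(drop_cols)).to_numpy()
    n_days = len(values) // STEPS_PER_DAY
    return values[: n_days * STEPS_PER_DAY].reshape(n_days, STEPS_PER_DAY, -1)

# Hypothetical 3-day frame: two features plus the two label columns at the end
hours = np.tile(np.arange(STEPS_PER_DAY) / 2, 3)
toy = pd.DataFrame({
    "Hour": hours,
    "GHI": np.random.default_rng(0).uniform(0, 900, len(hours)),
    "Target1": 0.0,
    "Target2": 0.0,
})
windows = to_daily_windows(toy)
# Same slicing as the train/validation split: the last two columns are the Day 7 / Day 8 labels
X, y_day7, y_day8 = windows[:, :, :-2], windows[:, :, -2], windows[:, :, -1]
print(windows.shape, X.shape, y_day7.shape)
```

The reshape avoids building every sliding window only to discard 47 out of 48 of them. It relies on the whole-day assumption, whereas the `Hour == 0` check in the original loop enforces it.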

- Data split

## train & validation split (shuffle=False keeps chronological order)
TF_X_train, TF_X_valid, TF_Y_train_1, TF_Y_valid_1 = train_test_split(tf_train[:,:,:-2], tf_train[:,:,-2], test_size=0.3, shuffle=False, random_state=42)
TF_X_train, TF_X_valid, TF_Y_train_2, TF_Y_valid_2 = train_test_split(tf_train[:,:,:-2], tf_train[:,:,-1], test_size=0.3, shuffle=False, random_state=42)

- Check the data shapes before feeding them to the models

print('for Day_7')

print(TF_X_train.shape)

print(TF_Y_train_1.shape)

print(TF_X_valid.shape)

print(TF_Y_valid_1.shape, '\n')

print('for Day_8')

print(TF_X_train.shape)

print(TF_Y_train_2.shape)

print(TF_X_valid.shape)

print(TF_Y_valid_2.shape)
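With `shuffle=False`, `train_test_split` simply cuts the array in order. The first 70% of the days go to training and the last 30% to validation, so every validation day comes after every training day. Without shuffling, `random_state` has no effect. A tiny check with a hypothetical stand-in for `tf_train` (10 "days" of (48, 3) windows, each filled with its day index):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for tf_train: day i is a (48, 3) block filled with the value i
days = np.arange(10)
X = np.repeat(days, 48 * 3).reshape(10, 48, 3).astype(float)
y = X[:, :, 0]

X_tr, X_va, y_tr, y_va = train_test_split(X, y, test_size=0.3, shuffle=False)
print(X_tr[:, 0, 0], X_va[:, 0, 0])
```

The training part holds days 0 to 6 and the validation part holds days 7 to 9. This is the usual setup for time series, since a shuffled split would leak future days into training.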

4️⃣ Model building and selection

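- 4 models (MLP, Conv1D CNN, LSTM, CNN-LSTM)

  - Conv1D CNN: learns correlations between neighboring time steps, with BatchNormalization

  - LSTM, which is known to suit time-series learning

  - CNN(w/ BN)-LSTM, combining the strengths of CNN and LSTM

  - Basic MLP + Dropout

- A separate model for each quantile and each prediction day (Day 7, 8)

  - 4 (models) × 9 (quantiles) × 2 (prediction days) = 72 models in total

- optimizer

  - Rectified Adam (RAdam) combined with LookAhead

    - Rectified Adam (RAdam)

      - Acts as an early guide so that training descends the loss "mountain" stably

      - Effectively provides an automatic warmup adapted to the current dataset, which ensures a stable start to training

    - LookAhead

      - Keeps a second set of "slow" weights that watch the fast optimizer from a distance. Every few steps, the slow weights move part of the way toward the fast weights, and the fast weights restart from there, which pulls back updates that went astray

      - Faster convergence on a variety of deep learning tasks with minimal computational overhead

    - => RAdam stabilizes the start of training, and every periodic slow-weight sync keeps the subsequent updates stable as well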
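The Lookahead idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the tensorflow_addons implementation. The inner optimizer is plain gradient descent instead of RAdam, and the objective f(w) = ‖w‖² is a toy. The values k = 6 and alpha = 0.5 mirror what I believe are the defaults of `tfa.optimizers.Lookahead` (`sync_period`, `slow_step_size`).

```python
import numpy as np

def lookahead_sgd(grad_fn, w0, lr=0.1, k=6, alpha=0.5, outer_steps=20):
    """Lookahead wrapped around plain gradient descent (a stand-in for RAdam)."""
    slow = np.asarray(w0, dtype=float)        # slow weights (phi)
    for _ in range(outer_steps):
        fast = slow.copy()                    # fast weights restart from the slow ones
        for _ in range(k):                    # k steps of the inner optimizer
            fast = fast - lr * grad_fn(fast)
        slow = slow + alpha * (fast - slow)   # move the slow weights part of the way
    return slow

# Minimize f(w) = ||w||^2, whose gradient is 2w
w = lookahead_sgd(lambda w: 2 * w, w0=[5.0, -3.0])
print(w)
```

Each outer step moves the slow weights only halfway toward where the fast weights ended up. This damps oscillations of the inner optimizer at the cost of one extra copy of the weights.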

## Compare Lookahead(RAdam), Adam, SGD
def get_opt(init_lr=5e-4):
    RAdam = tfa.optimizers.RectifiedAdam(learning_rate=init_lr)
    opt = tfa.optimizers.Lookahead(RAdam)
    # opt = tf.keras.optimizers.Adam(learning_rate=init_lr)
    # opt = tf.keras.optimizers.SGD(learning_rate=init_lr)
    return opt

## Pinball loss as defined by the competition
from tensorflow.keras.backend import mean, maximum

def quantile_loss(q, y, f):
    err = (y - f)
    return mean(maximum(q*err, (q-1)*err), axis=-1)

- CNN

## CNN model structure
def CNN(q, X_train, Y_train, X_valid, Y_valid, X_test):
    inputs = tf.keras.layers.Input(shape=(X_train.shape[1], X_train.shape[2]), name='input')
    norm = tf.keras.layers.experimental.preprocessing.Normalization()  # feature-wise normalization
    norm.adapt(X_train)  # learn the mean/variance from the training data
    norm_data = norm(inputs)
    # kernel_initializer='he_normal': He normal weight initialization (suited to ReLU)
    x = tf.keras.layers.Conv1D(100, 3, activation='relu', kernel_initializer='he_normal')(norm_data)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Conv1D(80, 3, activation='relu', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Conv1D(50, 3, activation='relu', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Conv1D(30, 3, activation='relu', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dropout(0.7)(x)
    x = tf.keras.layers.Dense(Y_train.shape[-1])(x)  # one output per half-hour step (48)
    x1 = tf.keras.layers.Flatten()(x)
    model = tf.keras.models.Model(inputs=inputs, outputs=x1)
    # tf.keras.utils.plot_model(model, show_shapes=True)  # needs pydot + graphviz; inside a function it only writes model.png
    # model.summary()
    model.compile(loss=lambda y, f: quantile_loss(q, y, f), optimizer=get_opt())
    es = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=20, restore_best_weights=True, verbose=1)
    history = model.fit(X_train, Y_train, epochs=500, batch_size=16, shuffle=True,
                        validation_data=(X_valid, Y_valid), callbacks=[es], verbose=0)
    train_score = np.asarray(quantile_loss(q, Y_train, model.predict(X_train))).flatten().mean()
    val_score = np.asarray(quantile_loss(q, Y_valid, model.predict(X_valid))).flatten().mean()
    print("CNN_train_score: ", train_score, '\n', 'CNN_val_score: ', val_score)
    pred = np.asarray(model.predict(X_test))
    return pred, model, val_score

- LSTM

def LSTM(q, X_train, Y_train, X_valid, Y_valid, X_test):
    inputs = tf.keras.layers.Input(shape=(X_train.shape[1], X_train.shape[2]), name='input')
    norm = tf.keras.layers.experimental.preprocessing.Normalization()
    norm.adapt(X_train)
    norm_data = norm(inputs)
    x = tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32, kernel_initializer='he_normal', recurrent_dropout=0.3, return_sequences=True))(norm_data)
    x = tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(16, kernel_initializer='he_normal', recurrent_dropout=0.3, return_sequences=True))(x)
    x = tf.keras.layers.Dense(1)(x)    # one value per time step -> (batch, 48, 1)
    x1 = tf.keras.layers.Flatten()(x)  # -> (batch, 48)
    model = tf.keras.models.Model(inputs=inputs, outputs=x1)
    # tf.keras.utils.plot_model(model, show_shapes=True)  # needs pydot + graphviz; inside a function it only writes model.png
    model.compile(loss=lambda y, f: quantile_loss(q, y, f), optimizer=get_opt(1e-2))
    # model.summary()
    es = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=20, restore_best_weights=True, verbose=1)
    history = model.fit(X_train, Y_train, epochs=500, batch_size=16, shuffle=True,
                        validation_data=(X_valid, Y_valid), callbacks=[es], verbose=0)
    train_score = np.asarray(quantile_loss(q, Y_train, model.predict(X_train))).flatten().mean()
    val_score = np.asarray(quantile_loss(q, Y_valid, model.predict(X_valid))).flatten().mean()
    print("LSTM_train_score: ", train_score, '\n', 'LSTM_val_score: ', val_score)
    pred = np.asarray(model.predict(X_test))
    return pred, model, val_score

- CNN-LSTM

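- A bidirectional LSTM reads the sequence in both directions, so the output at each time step depends on both earlier and later time steps of the input window

- A standard RNN only follows the sequence in one direction (front -> back), while a bidirectional RNN also follows it in reverse order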
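A bidirectional layer is just two recurrent passes whose outputs are concatenated. The sketch below uses a plain tanh RNN instead of an LSTM to keep it short; the cell type, sizes, and random weights are illustrative assumptions. Perturbing only the last input shows that the backward half of the output at t = 0 already "sees" the end of the sequence, while the forward half does not.

```python
import numpy as np

def simple_rnn(x, W_x, W_h):
    """tanh RNN over x of shape (T, D); returns all hidden states, shape (T, H)."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in x:
        h = np.tanh(W_x @ x_t + W_h @ h)
        states.append(h)
    return np.stack(states)

def bidirectional_rnn(x, fwd_params, bwd_params):
    """Concatenate a forward pass and a backward (time-reversed) pass per time step."""
    fwd = simple_rnn(x, *fwd_params)
    bwd = simple_rnn(x[::-1], *bwd_params)[::-1]  # flip back to the original time order
    return np.concatenate([fwd, bwd], axis=-1)

rng = np.random.default_rng(0)
T, D, H = 4, 3, 4
params_f = (rng.normal(size=(H, D)), rng.normal(scale=0.5, size=(H, H)))
params_b = (rng.normal(size=(H, D)), rng.normal(scale=0.5, size=(H, H)))
x = rng.normal(size=(T, D))

out = bidirectional_rnn(x, params_f, params_b)
x_changed = x.copy()
x_changed[-1] += 1.0  # perturb only the LAST time step
out_changed = bidirectional_rnn(x_changed, params_f, params_b)

print(out.shape)  # (4, 8): H forward + H backward features per step
print("forward part at t=0 unchanged:", np.array_equal(out[0, :H], out_changed[0, :H]))
print("backward part at t=0 unchanged:", np.array_equal(out[0, H:], out_changed[0, H:]))
```

This is also why `Bidirectional(LSTM(20, return_sequences=True))` in the code below emits 40 features per time step. Keras concatenates the two directions by default, so each direction contributes 20.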

def CNN_LSTM(q, X_train, Y_train, X_valid, Y_valid, X_test):
    inputs = tf.keras.layers.Input(shape=(X_train.shape[1], X_train.shape[2]), name='input')
    norm = tf.keras.layers.experimental.preprocessing.Normalization()
    norm.adapt(X_train)
    norm_data = norm(inputs)
    # padding='same' keeps all 48 time steps so the LSTM head can emit one value per step
    x = tf.keras.layers.Conv1D(100, 3, activation='relu', padding='same', kernel_initializer='he_normal')(norm_data)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Conv1D(80, 3, activation='relu', padding='same', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Conv1D(50, 3, activation='relu', padding='same', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.Conv1D(30, 3, activation='relu', padding='same', kernel_initializer='he_normal')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    # Bidirectional LSTM: reads the sequence forward and backward, so each step also uses later time steps
    # A standard RNN only follows one direction (front -> back); a bidirectional RNN also follows the reverse order
    x = tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(20, kernel_initializer='he_normal', return_sequences=True, recurrent_dropout=0.3))(x)
    x = tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(10, kernel_initializer='he_normal', return_sequences=True, recurrent_dropout=0.3))(x)
    x = tf.keras.layers.Dense(1)(x)
    x1 = tf.keras.layers.Flatten()(x)
    model = tf.keras.models.Model(inputs=inputs, outputs=x1)
    # tf.keras.utils.plot_model(model, show_shapes=True)  # needs pydot + graphviz; inside a function it only writes model.png
    model.compile(loss=lambda y, f: quantile_loss(q, y, f), optimizer=get_opt(1e-2))
    es = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=20, restore_best_weights=True, verbose=1)
    history = model.fit(X_train, Y_train, epochs=500, batch_size=16, shuffle=True,
                        validation_data=(X_valid, Y_valid), callbacks=[es], verbose=0)
    train_score = np.asarray(quantile_loss(q, Y_train, model.predict(X_train))).flatten().mean()
    val_score = np.asarray(quantile_loss(q, Y_valid, model.predict(X_valid))).flatten().mean()
    print("CNN-LSTM_train_score: ", train_score, '\n', 'CNN-LSTM_val_score: ', val_score)
    pred = np.asarray(model.predict(X_test))
    return pred, model, val_score

- MLP

def MLP(q, X_train, Y_train, X_valid, Y_valid, X_test):
    inputs = tf.keras.layers.Input(shape=(X_train.shape[1], X_train.shape[2]), name='input')
    norm = tf.keras.layers.experimental.preprocessing.Normalization()
    norm.adapt(X_train)
    norm_data = norm(inputs)
    x = tf.keras.layers.Flatten()(norm_data)  # (48, n_features) -> one flat vector
    x = tf.keras.layers.Dense(100, activation='relu', kernel_regularizer=tf.keras.regularizers.l2(1e-3))(x)
    x = tf.keras.layers.Dropout(0.7)(x)
    x = tf.keras.layers.Dense(100, activation='relu', kernel_regularizer=tf.keras.regularizers.l2(1e-3))(x)
    x = tf.keras.layers.Dropout(0.7)(x)
    x = tf.keras.layers.Dense(100, activation='relu', kernel_regularizer=tf.keras.regularizers.l2(1e-3))(x)
    x = tf.keras.layers.Dropout(0.7)(x)
    x = tf.keras.layers.Dense(100, activation='relu', kernel_regularizer=tf.keras.regularizers.l2(1e-3))(x)
    x = tf.keras.layers.Dropout(0.7)(x)
    x1 = tf.keras.layers.Dense(Y_train.shape[-1])(x)
    model = tf.keras.models.Model(inputs=inputs, outputs=x1)
    # tf.keras.utils.plot_model(model, show_shapes=True)  # needs pydot + graphviz; inside a function it only writes model.png
    model.compile(loss=lambda y, f: quantile_loss(q, y, f), optimizer=get_opt())
    es = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=20, restore_best_weights=True, verbose=1)
    history = model.fit(X_train, Y_train, epochs=500, batch_size=16, shuffle=True,
                        validation_data=(X_valid, Y_valid), callbacks=[es], verbose=0)
    train_score = np.asarray(quantile_loss(q, Y_train, model.predict(X_train))).flatten().mean()
    val_score = np.asarray(quantile_loss(q, Y_valid, model.predict(X_valid))).flatten().mean()
    print("MLP_train_score: ", train_score, '\n', 'MLP_val_score: ', val_score)
    pred = np.asarray(model.predict(X_test))
    return pred, model, val_score

- train and predict Test data

def TF_train_func(X_train, Y_train, X_valid, Y_valid, X_test):
    models = []
    actual_pred = []
    for model_select in ['CNN', 'MLP', 'LSTM', 'CNNLSTM']:
        score_lst = []
        for q in [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]:
            print(model_select, q)
            if model_select == 'LSTM':
                pred, mod, s = LSTM(q, X_train, Y_train, X_valid, Y_valid, X_test)
            elif model_select == 'CNN':
                pred, mod, s = CNN(q, X_train, Y_train, X_valid, Y_valid, X_test)
            elif model_select == 'CNNLSTM':
                pred, mod, s = CNN_LSTM(q, X_train, Y_train, X_valid, Y_valid, X_test)
            elif model_select == 'MLP':
                pred, mod, s = MLP(q, X_train, Y_train, X_valid, Y_valid, X_test)
            score_lst.append(s)
            models.append(mod)
            actual_pred.append(pred)
        print(sum(score_lst)/len(score_lst))  # mean validation pinball loss over the 9 quantiles
    return models, np.asarray(actual_pred)

- train

import keras
import pydot
import pydotplus
from pydotplus import graphviz
from tensorflow.keras.utils import plot_model  # only needed to draw model diagrams (requires pydot + graphviz)

## total of 4*9*2 models are trained (4 models, 9 quantiles, 2 separate target days)
models_tf1, results_tf1 = TF_train_func(TF_X_train, TF_Y_train_1, TF_X_valid, TF_Y_valid_1, tf_test)
models_tf2, results_tf2 = TF_train_func(TF_X_train, TF_Y_train_2, TF_X_valid, TF_Y_valid_2, tf_test)

Step 1 : pip install pydot

Step 2 : pip install pydotplus

Step 3 : sudo apt-get install graphviz

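I installed all of them, but I still get an error T_T

I really want to see the results.. T_T

Oh.. after waiting a while, the results are slowly coming out. It looks like this will take a very long time.

Still being revised~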

