[Paper Review] Time Series Forecasting (TSF) Using Various Deep Learning Models

징징알파카, 2022. 9. 19. 15:18 (written 220919)

<This post was written while studying the paper by Jimeng Shi, Mahek Jain, and Giri Narasimhan :-) >

https://arxiv.org/abs/2204.11115


🟣 Abstract

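- Time series forecasting (TSF) predicts target variables at a future time point, based on what is learned from previous time points

- To keep the problem tractable, the learning methods use data from a fixed-length window in the past as an explicit input

- Deep learning methods (RNN, LSTM, GRU, and Transformer) are compared with baseline methods

- The Transformer model has the best performance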

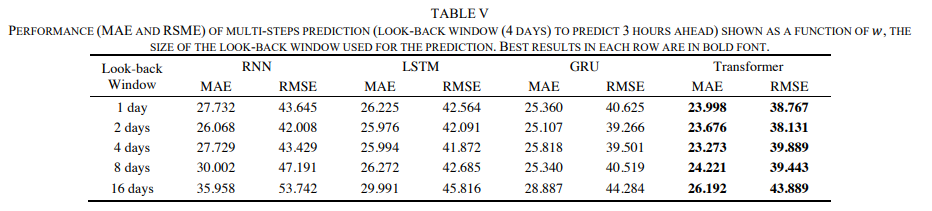

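- Lowest mean absolute error (MAE = 14.599, 23.273) for most single-step and multi-step predictions

- Lowest root mean square error (RMSE = 23.573, 38.165)

- The best look-back window size is one day for predicting 1 hour ahead. For predicting 3 hours ahead, 2 or 4 days work best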

1️⃣ INTRODUCTION

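- A time series is a sequence of repeated observations of a given set of variables over a period of time

- e.g., stock prices, rainfall, traffic volume, telecommunications, transportation networks

- Models for capturing time series fall into three categories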

- Traditional models

- Linear

- Autoregressive Moving Average (ARMA)

- Autoregressive Integrated Moving Average (ARIMA)

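- Nonlinear

- Autoregressive Fractionally Integrated Moving Average (ARFIMA)

- Seasonal Autoregressive Integrated Moving Average (SARIMA)

- Limitations

- They apply regression on a fixed set of factors from the most recent past data to produce a forecast

- Traditional methods are iterative and often sensitive to how the process is seeded

- Stationarity is a strict condition. Handling only drift, seasonality, autocorrelation, and heterogeneity is often not enough to make a volatile time series stationary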


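- Machine learning models

- Support vector machines (SVM)

- Recurrent neural networks (RNN)

- Long short-term memory (LSTM)

- Attention-based methods called Transformers

- Deep learning models

- Artificial neural networks (ANN)

- Deep neural networks

- Goals

- (1) Apply and validate deep learning models (RNN, LSTM, GRU, Transformer) for time series forecasting, and compare their performance

- (2) Evaluate the strengths and weaknesses of these models

- (3) Understand how the size of the look-back window and the length of the prediction horizon affect the results

- (4) Identify the optimal look-back window size for the best prediction at a given future time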


2️⃣ METHODOLOGY

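- Using historical data, the deep learning models learn the functional relationship between the input features and the future values of the target variable

- The resulting model provides predictions of the target variable at future time points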

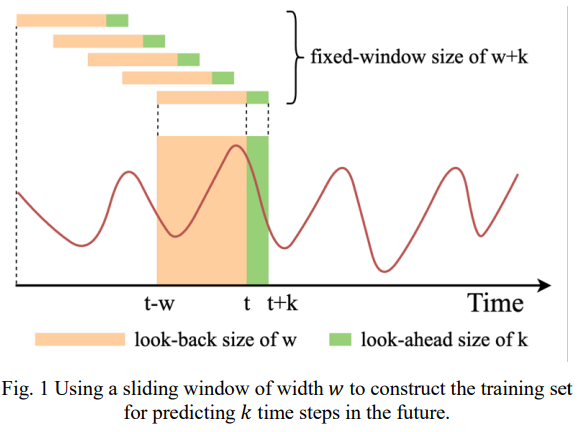

- To feed the models inputs of uniform length, a fixed-length sliding time window of size w is used, as shown in Figure 1
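The sliding window in Figure 1 can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code. `make_windows` is a hypothetical helper. The label is assumed to be taken k steps after the last observed step in the window, so k = 1 means the very next time point. The paper's exact index convention may differ.

```python
import numpy as np


def make_windows(features: np.ndarray, target: np.ndarray, w: int, k: int):
    """Build (input window, future target) pairs with a fixed-length sliding window.

    features: shape (T, m), the m input features at each time step
    target:   shape (T,), the target variable (e.g. PM2.5)
    w:        look-back window size (number of past steps fed to the model)
    k:        horizon; the label is k steps after the window's last step (assumption)
    """
    inputs, labels = [], []
    # The window covers indices [start, start + w); the last valid start keeps the label in range.
    for start in range(len(target) - w - k + 1):
        end = start + w
        # Past target values are stacked with past features, mirroring the inputs of f_k.
        inputs.append(np.column_stack([target[start:end], features[start:end]]))
        labels.append(target[end - 1 + k])
    return np.stack(inputs), np.array(labels)
```

For a series of length T, this yields T - w - k + 1 samples, each of shape (w, m + 1).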

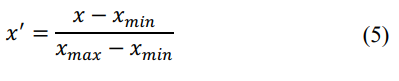

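- The data are transformed by min-max scaling using Eq. (5)

- Mathematically, the functional relationship learned by the model can be written as an equation with the following terms:

- ŷ(t+k) is the predicted value of the target variable at time t+k

- k is how far into the future (in time steps) the target variable is predicted

- y(t-w) to y(t-1) are the observed target values

- x(t-w) to x(t-1) are the vectors of input features observed from time (t-w) to (t-1)

- f(k) is the function learned by the deep learning model

- m is the number of input features

- w is the size of the window used as input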
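Combining the terms above gives the learned relationship below. It is reconstructed from the listed definitions, so the paper's own typesetting may differ slightly:

```latex
\hat{y}^{(t+k)} = f_k\left(y^{(t-w)}, \dots, y^{(t-1)},\; \mathbf{x}^{(t-w)}, \dots, \mathbf{x}^{(t-1)}\right),
\qquad \mathbf{x}^{(i)} \in \mathbb{R}^{m}
```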

3️⃣ DEEP LEARNING FRAMEWORKS

This section briefly describes the deep learning models used in this work: recurrent neural networks (RNN), long short-term memory (LSTM), gated recurrent units (GRU), and Transformers.

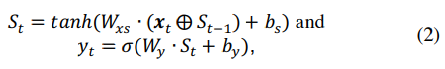

💗 A. Recurrent Neural Networks (RNN)

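- RNNs are well suited to modeling time series data

- They use a neural network to model the functional relationship between recent input features and the future target variable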

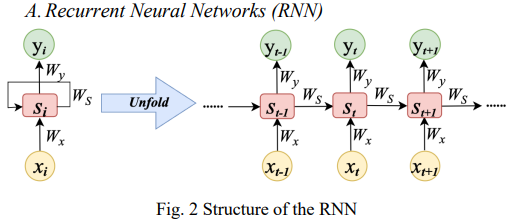

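- As shown in Figure 2, an RNN learns from a training set of historical data by focusing on how the internal (hidden) state transitions from time t-1 to t

- The resulting model is determined by three parameter matrices, W(x), W(y), and W(s), and two bias vectors, b(s) and b(y)

- The output y(t) depends on the internal state s(t). In turn, s(t) depends on both the current input x(t) and the previous state s(t-1)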

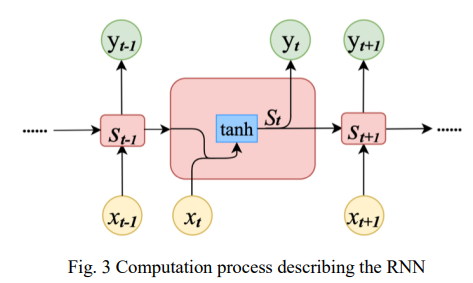

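- Figure 3 shows the computation in each hidden state (hidden unit or hidden cell)

- b(s), b(y) ∈ R^N : bias vectors for the internal state and the output

- σ : sigmoid activation function

- s(t) : internal (hidden) state

- The biggest drawback of RNNs is the vanishing gradient problem, which is caused by repeated multiplication by the recurrent weight matrix

- Over time the gradients become very small, so the RNN retains information only over short time spans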
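Here is a minimal NumPy sketch of the recurrence described above. It assumes the sigmoid state update listed in Figure 3 and a linear readout for y(t); the readout form is an assumption. It also multiplies the step Jacobians ∂s(t)/∂s(t-1) backwards to show why the gradient vanishes. Each factor is diag(σ'(a))·W(s), and σ' ≤ 0.25. Function names are illustrative.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def rnn_forward(xs, W_x, W_s, W_y, b_s, b_y):
    """Run a vanilla RNN over xs with shape (T, input_dim).

    Returns outputs y(t), hidden states s(t), and pre-activations for each step.
    """
    s = np.zeros(W_s.shape[0])              # initial state s(0) = 0 (assumption)
    ys, states, pre = [], [], []
    for x in xs:
        a = W_x @ x + W_s @ s + b_s         # mix current input x(t) with previous state s(t-1)
        s = sigmoid(a)                      # new internal (hidden) state s(t)
        ys.append(W_y @ s + b_y)            # linear readout of y(t) from s(t) (assumption)
        states.append(s)
        pre.append(a)
    return np.array(ys), np.array(states), np.array(pre)


def backprop_jacobian_norms(W_s, pre):
    """Spectral norm of ds(T)/ds(T-n) for n = 1, 2, ..., len(pre).

    Each step back multiplies by diag(sigmoid'(a)) @ W_s, and sigmoid' <= 0.25.
    """
    J = np.eye(W_s.shape[0])
    norms = []
    for a in pre[::-1]:
        d = sigmoid(a) * (1.0 - sigmoid(a))  # derivative of the sigmoid at a
        J = J @ (np.diag(d) @ W_s)
        norms.append(np.linalg.norm(J, 2))
    return norms


rng = np.random.default_rng(0)
T, n_in, n_hidden = 20, 3, 8
W_x = rng.normal(size=(n_hidden, n_in))
W_s = rng.normal(size=(n_hidden, n_hidden))
W_s /= np.linalg.norm(W_s, 2)              # scale the recurrent matrix to spectral norm 1
W_y = rng.normal(size=(1, n_hidden))
ys, states, pre = rnn_forward(rng.normal(size=(T, n_in)), W_x, W_s, W_y,
                              np.zeros(n_hidden), np.zeros(1))
norms = backprop_jacobian_norms(W_s, pre)
```

With W(s) scaled to spectral norm 1, each step back shrinks the gradient by a factor of at least 4. After 20 steps, almost nothing reaches the early inputs.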

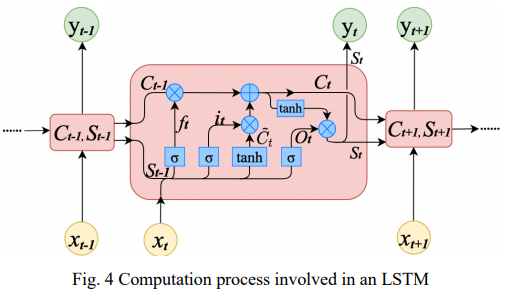

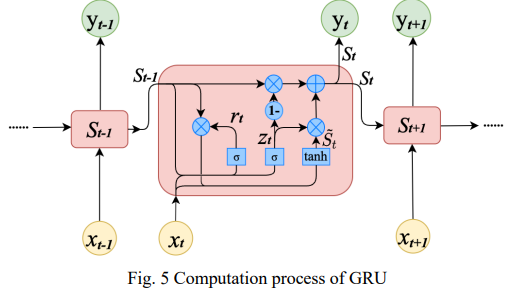

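💗 B. Long Short-Term Memory (LSTM)

- Long Short-Term Memory (LSTM) networks are a variant of RNNs. They partially solve the vanishing gradient problem and can learn long-term dependencies in time series data

- At time t, the model is described by the internal (hidden) state s(t) and the cell state c(t)

- As shown in Figure 4, c(t) depends on three things:

- (1) the previous cell state, c(t-1)

- (2) the previous internal state, s(t-1)

- (3) the input at the current time step, x(t)

- The process in Figure 4 uses a forget gate, an input gate, an addition gate, and an output gate. These implement f(t), i(t), C~(t), and O(t) respectively, and together they remove/filter, multiply/combine, and add information. This gives finer control over learning long-term dependencies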
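The following sketches a single LSTM step using the common gate equations. This parameterization is an assumption; the exact form in Figure 4 may differ. `lstm_step`, `init_params`, and the `params` layout are hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_step(x, s_prev, c_prev, params):
    """One LSTM step in the common formulation.

    params maps "f", "i", "c", "o" to (W, b) pairs; W acts on [x(t), s(t-1)] concatenated.
    """
    z = np.concatenate([x, s_prev])
    f = sigmoid(params["f"][0] @ z + params["f"][1])        # forget gate f(t)
    i = sigmoid(params["i"][0] @ z + params["i"][1])        # input gate i(t)
    c_tilde = np.tanh(params["c"][0] @ z + params["c"][1])  # candidate content C~(t)
    o = sigmoid(params["o"][0] @ z + params["o"][1])        # output gate O(t)
    c = f * c_prev + i * c_tilde    # filter the old cell state, then add the gated new content
    s = o * np.tanh(c)              # new internal (hidden) state s(t)
    return s, c


def init_params(n_in, n_hidden, rng):
    """Random weights and zero biases for the four gates (illustrative only)."""
    return {g: (rng.normal(scale=0.1, size=(n_hidden, n_in + n_hidden)), np.zeros(n_hidden))
            for g in "fico"}
```

Suppose the forget gate is saturated open and the input gate is closed, for example with a large positive f bias and a large negative i bias. Then c(t) ≈ c(t-1). This additive path lets information survive across many steps.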

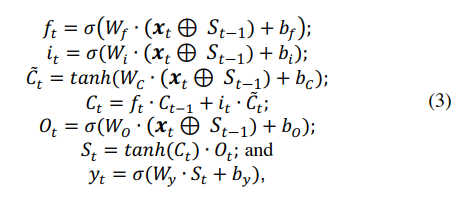

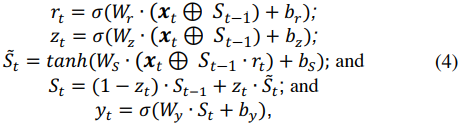

💗 C. Gated Recurrent Unit (GRU)

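- The gated recurrent unit (GRU) is a variant of LSTM that further addresses the vanishing gradient problem

- As shown in Figure 5, its novelty is the use of an update gate, a reset gate, and a third gate, which implement z(t), r(t), and s~(t) respectively

- Each gate plays a different role in controlling how prior information is filtered, used, and combined

- The first term of the next-state equation, (1 - z(t)) • s(t-1), decides what to keep from the past

- The second term, z(t) • s~(t), decides what to take from the current memory content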
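The next-state equation above, s(t) = (1 - z(t)) • s(t-1) + z(t) • s~(t), can be sketched as a single GRU step. The gate parameterization, with weights acting on concatenated vectors, is an assumption in the style of common GRU formulations. `gru_step` is a hypothetical name.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def gru_step(x, s_prev, params):
    """One GRU step; params maps "z", "r", "s" to (W, b) pairs acting on concatenated vectors."""
    xs = np.concatenate([x, s_prev])
    z = sigmoid(params["z"][0] @ xs + params["z"][1])        # update gate z(t)
    r = sigmoid(params["r"][0] @ xs + params["r"][1])        # reset gate r(t)
    # Candidate state s~(t): the reset gate scales how much of s(t-1) it may use.
    xr = np.concatenate([x, r * s_prev])
    s_tilde = np.tanh(params["s"][0] @ xr + params["s"][1])
    # (1 - z) * s(t-1) keeps part of the past; z * s~(t) takes in the new content.
    return (1.0 - z) * s_prev + z * s_tilde
```

When z(t) ≈ 0, the state is copied through unchanged. When z(t) ≈ 1, it is replaced by the candidate s~(t).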

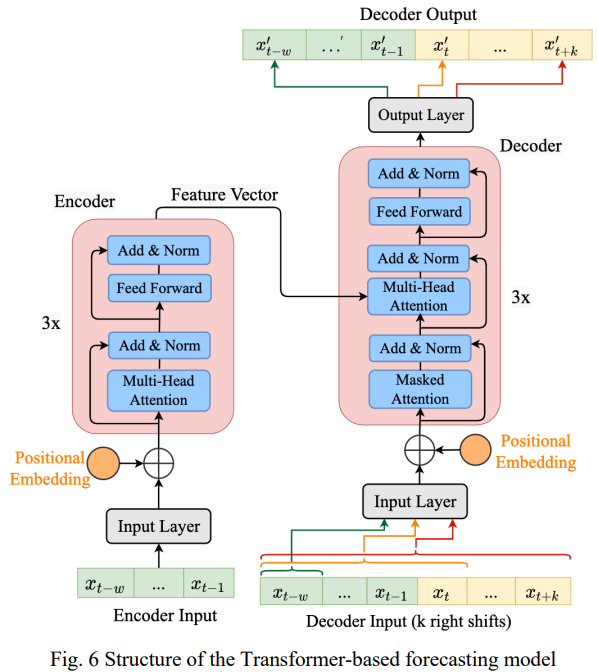

💗 D. Transformer Model

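- LSTMs and GRUs only partially address the vanishing gradient problem of RNNs

- However, using the hyperbolic tangent and sigmoid as activation functions still causes gradients to decay in deeper layers

- Transformer networks are known to perform best on time series. Their attention function lets them selectively give large weight to important information from the past

- Figure 6 shows a schematic of the transformer network

- It consists of two parts: an encoder and a decoder

- w is the look-back window size

- k is the number of future steps to predict

- The decoder has a masked attention mechanism. It also has a multi-head attention mechanism that selects among the encoder outputs to form the decoder's feature vectors

- The Transformer is not a recurrent network; instead, it uses positional encoding to mark the temporal order of the data

- The encoder is fed the data from the look-back window of size w and outputs feature vectors for the decoder to use

- During training, the decoder is also given the future data it is expected to model, along with the encoder output

- The attention function of transformer networks helps them learn to attend to important features and past trends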
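Two of the ingredients mentioned above can be sketched in NumPy: sinusoidal positional encoding and (masked) scaled dot-product attention. Both use the standard formulation from the original Transformer paper. That is an assumption here, since the blog does not give the model's embedding size, number of heads, or layer counts. Only a single head is shown, and the function names are illustrative.

```python
import numpy as np


def positional_encoding(length: int, d_model: int) -> np.ndarray:
    """Sinusoidal positional encoding (d_model assumed even)."""
    pos = np.arange(length)[:, None]
    i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, i / d_model)
    pe = np.zeros((length, d_model))
    pe[:, 0::2] = np.sin(angles)   # even dimensions
    pe[:, 1::2] = np.cos(angles)   # odd dimensions
    return pe


def attention(q, k, v, causal=False):
    """Single-head scaled dot-product attention; causal=True gives masked (decoder) attention."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if causal:
        # Block each position from attending to later positions.
        mask = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability for softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights
```

Adding `positional_encoding(w, d_model)` to the embedded look-back window is what gives a non-recurrent model access to time order. The causal mask is what "masked attention" refers to in the decoder.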

4️⃣ DATA AND EXPERIMENTS

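- We apply four machine learning techniques to the Beijing air quality dataset from the UCI website to perform time series forecasting (TSF) of air quality

- Two types of experiments were performed:

- "Single-step": predicting the next time point from the data at previous time points

- "Multi-step": predicting the next several time points from the data at previous time points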

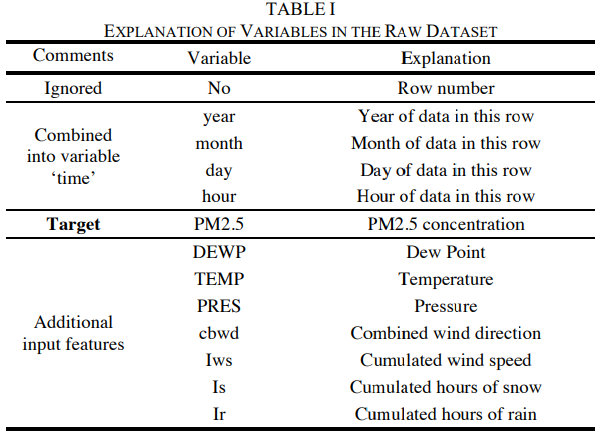

💕 A. Dataset

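- The dataset is the hourly Beijing air quality dataset from the UCI website

- It covers five years, from January 1, 2010 to December 31, 2014

- The data were collected hourly; the dataset has 43,824 rows and 13 columns

- The first column is just an index and is ignored in the analysis

- The four columns for year, month, day, and hour are combined into a single feature, "year-month-day-hour"

- The 'PM2.5' column is the target variable

- All other variables (along with time) are used as input features

- Column names and descriptions are given in Table I

- Figure 7 shows the time series of all input and target features except time and 'cbwd'

- Some rows (24 of the 43,824) were discarded because of missing data

- One-hot encoding was applied to the categorical wind-direction feature

- The data were normalized to the range [0, 1] with min-max normalization

- The data were split into a training set (first 70% of rows) and a test set (last 30% of rows)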
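The preprocessing steps above can be sketched with pandas. The sketch assumes the column names of the UCI Beijing PM2.5 file (`No`, `year`, `month`, `day`, `hour`, `pm2.5`, `DEWP`, `TEMP`, `PRES`, `cbwd`, `Iws`, `Is`, `Ir`). It also keeps the merged timestamp as the index rather than as a scaled input column; the paper's exact time encoding is not specified here. `preprocess` is a hypothetical helper.

```python
import numpy as np
import pandas as pd


def preprocess(df: pd.DataFrame, train_frac: float = 0.7):
    """Prepare a frame with the UCI Beijing PM2.5 column layout for TSF."""
    df = df.drop(columns=["No"])                   # the first column is only an index
    # Merge year, month, day, hour into a single timestamp.
    df["datetime"] = pd.to_datetime(df[["year", "month", "day", "hour"]])
    df = df.drop(columns=["year", "month", "day", "hour"]).set_index("datetime")
    df = df.dropna()                               # discard rows with missing values
    df = pd.get_dummies(df, columns=["cbwd"], dtype=float)  # one-hot wind direction
    # Min-max normalization of every column to [0, 1].
    col_min, col_max = df.min(), df.max()
    df = (df - col_min) / (col_max - col_min)
    # Chronological split: first 70% of rows for training, last 30% for testing.
    n_train = int(len(df) * train_frac)
    return df.iloc[:n_train], df.iloc[n_train:]
```

This follows the order described (normalize, then split), so the scaler sees all rows. Computing `col_min` and `col_max` on the training rows only would avoid leaking test-set statistics into training.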

💕 B. Experiments

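- Experiments with k = 1 predict one step into the future (single-step prediction)

- Experiments with k > 1 predict more than one time point into the future (multi-step prediction)

- Both kinds of experiments were run with different values of the look-back window, i.e., the portion of the recent past used as input

- Window sizes of 1, 2, 4, 8, and 16 days were used

- The window sizes grow exponentially (doubling each time) to show how window size affects prediction accuracy

- Multi-step prediction was used to predict air-quality values 1, 2, 4, 8, and 16 hours ahead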

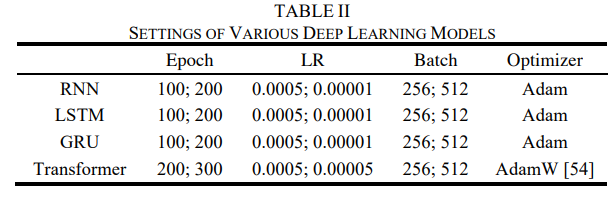

- For each of the four deep learning models, different hyperparameter settings were tried, as shown in Table II

- Learning rate (0.00001, 0.00005, 0.0001, 0.0005, 0.001)

- Batch size (128, 256, 512)

- Optimizer (Adam, SGD)
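The settings above can be enumerated as a small experiment grid. The blog does not say whether every combination in Table II was run, so treating the hyperparameters as a full Cartesian product is an assumption. The constant and function names are illustrative.

```python
from itertools import product

# Look-back windows of 1, 2, 4, 8, 16 days, expressed in hours.
WINDOWS_H = [24 * 2**i for i in range(5)]
# Multi-step horizons: 1, 2, 4, 8, 16 hours ahead.
HORIZONS_H = [2**i for i in range(5)]
LEARNING_RATES = [0.00001, 0.00005, 0.0001, 0.0005, 0.001]
BATCH_SIZES = [128, 256, 512]
OPTIMIZERS = ["Adam", "SGD"]


def hyperparameter_grid():
    """Every (learning rate, batch size, optimizer) combination from Table II."""
    return [
        {"lr": lr, "batch_size": bs, "optimizer": opt}
        for lr, bs, opt in product(LEARNING_RATES, BATCH_SIZES, OPTIMIZERS)
    ]
```

A full grid gives 5 × 3 × 2 = 30 settings per model, window, and horizon. This quickly becomes expensive, which is one reason to fix some settings early.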

💕 C. Measures of Evaluation

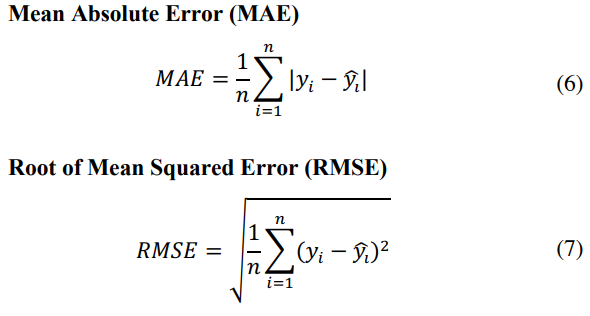

- Mean squared error (MSE) is used as the loss function

- Training and test losses are computed with this function over the epochs to detect possible overfitting

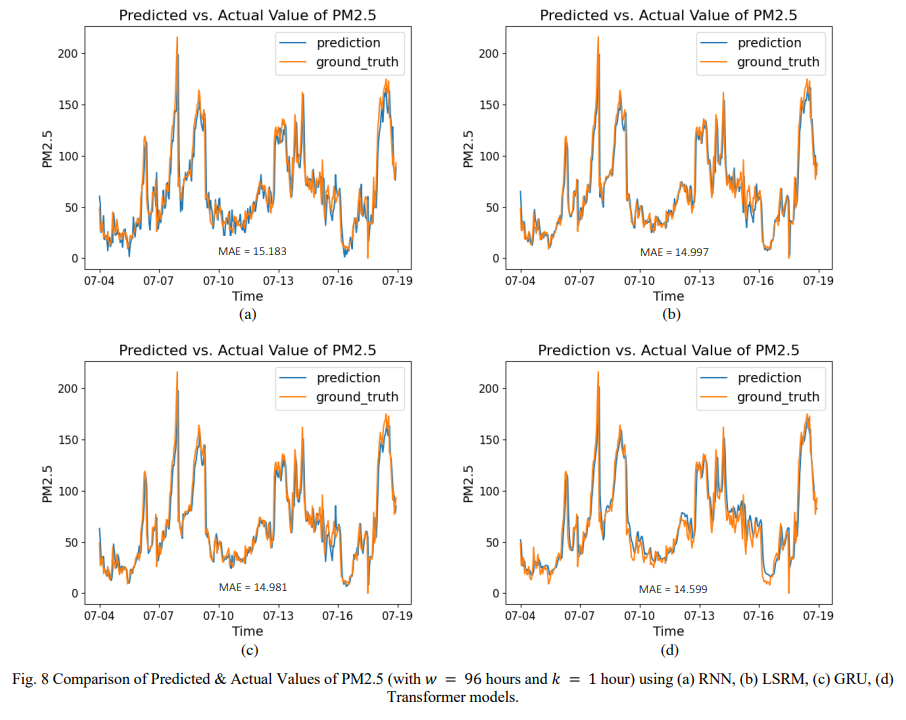

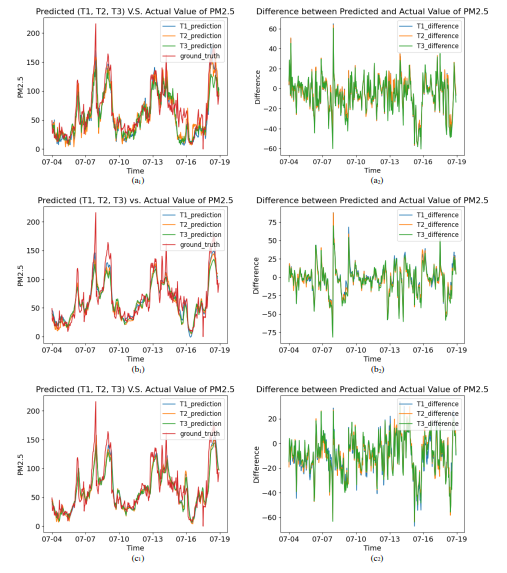

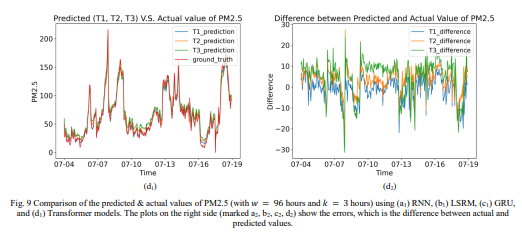

- Figures 8 and 9 show short-term air-quality predictions and observations from 2013-07-04 09:00 to 2013-07-19 08:00

- Mean absolute error (MAE) and root mean square error (RMSE) are computed with the standard formulas shown below
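For reference, here are the standard formulas in NumPy: MSE = (1/n)Σ(y - ŷ)², MAE = (1/n)Σ|y - ŷ|, and RMSE = √MSE. This is a generic sketch, not the paper's evaluation code. If a model is trained on min-max-scaled data, the scaling has to be inverted first for the errors to be in the units of the raw PM2.5 values.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error, used as the training loss."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the MSE, in the target's units."""
    return float(np.sqrt(mse(y_true, y_pred)))


print(mae([1, 2, 3, 4], [1, 2, 3, 8]))   # → 1.0
print(rmse([1, 2, 3, 4], [1, 2, 3, 8]))  # → 2.0
```

A single large miss doubles RMSE relative to MAE in this example, which is why RMSE is always at least as large as MAE and reacts more to occasional big errors.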

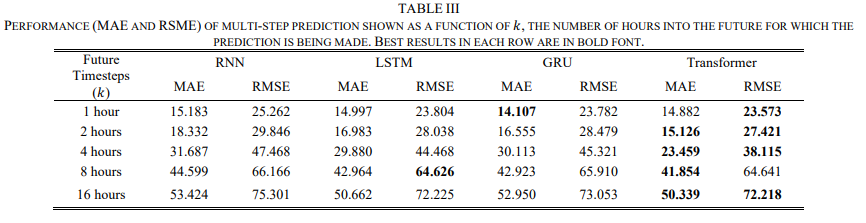

✔ A. Predict Multiple Timesteps Ahead

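- For a fixed look-back window size, we examine how model performance degrades as k (how far ahead the time series value is predicted) increases

- It is reasonable to expect performance to degrade as this requirement grows

- The MAE and RMSE values in each column of Table III increase with k, which confirms this expectation

- Transformer models outperform RNN, LSTM, and GRU in 80% of the experiments

- Prediction performance drops sharply when predicting more than 4 hours into the future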

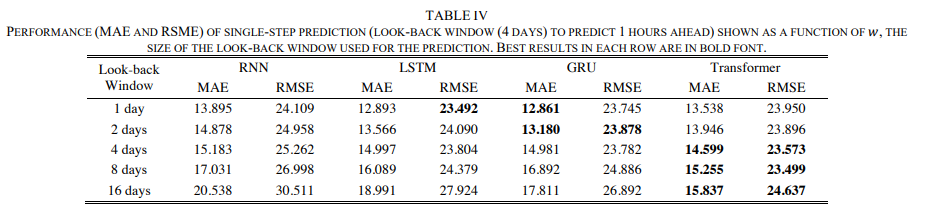

✔ B. Different Look-back Window Sizes

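- We examine how the look-back window size affects the performance of single-step and multi-step predictions

- Experiments were run with w = 24, 48, 96, 192, and 384 hours

- Single-step predictions: Table IV summarizes the experimental results

- The transformer network model outperforms the other methods for larger values of w (≥ 96 hours)

- This is consistent with the known strengths of attention-based approaches

- For smaller window sizes (24 or 48 hours), GRU and LSTM perform better than RNN

- This is consistent with the claim that GRU and LSTM have longer memory than RNN and partially solve the vanishing gradient problem

- For single-step predictions, LSTM and GRU are the better choice when only small window sizes are an option

- Unfortunately, Transformer networks do not achieve better performance even with much larger window sizes, since larger windows also bring more noise

- Moreover, smaller windows are likely to lead to more efficient methods

- A simple baseline that just reports the time series value at the previous time point as its prediction has MAE and RMSE values of 16.624 and 26.828, respectively

- Multi-step predictions: Table IV shows the results of experiments predicting k = 3 hours ahead for various values of w

- The transformer network model outperforms all the other methods

- The change in performance is not monotonic. The minimum is reached at w = 48 or 96 hours, which suggests these may be optimal values for the learning methods

- Figure 9 visualizes the predictions from these experiments

- Curves T1, T2, and T3 show the results for k = 1, 2, and 3 hours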
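The previous-value baseline described above takes only a few lines and serves as a sanity check for any model. This is a minimal sketch: `persistence_forecast` is a hypothetical helper, and k = 1 corresponds to the single-step case.

```python
import numpy as np


def persistence_forecast(series, k=1):
    """Naive baseline: the forecast for step t + k is the value observed at step t.

    Returns (y_true, y_pred) aligned element-wise; k must be at least 1.
    """
    if k < 1:
        raise ValueError("k must be >= 1")
    series = np.asarray(series, dtype=float)
    return series[k:], series[:-k]


y_true, y_pred = persistence_forecast([10.0, 12.0, 11.0, 15.0], k=1)
baseline_mae = float(np.mean(np.abs(y_true - y_pred)))   # (2 + 1 + 4) / 3
```

A learned model only adds value where it beats this baseline on the same test split.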

5️⃣ CONCLUSIONS

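- The conclusions from the experiments with four different deep learning models are summarized as follows:

- Transformer network models perform best when predicting further into the future

- LSTM and GRU outperform RNN for short-term prediction

- Performance as a function of the look-back window size shows local minima

- For single-step predictions, the optimal window size is w = 24 hours

- For multi-step predictions, the optimal value is w = 48 or 96 hours (when predicting k = 3 hours ahead)

- For multi-step predictions, Transformers outperform the other methods

- For single-step predictions, Transformers outperform the others only with longer look-back windows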
